Why service discovery is needed

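In a K8S cluster, pods have the following characteristics:

1. Services are highly dynamic

When a container is rescheduled within k8s, the pod's IP address changes.

2. Frequent updates and releases

Versions iterate quickly, and new pods get different IP addresses from the old ones.

3. Autoscaling

During big promotions or traffic peaks, pods are scaled up and down dynamically, so IP addresses appear and disappear.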

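Service resources solve pod discovery:

To cope with changing pod addresses, deploy a Service resource that proxies the backend pods. The Service exposes a fixed address (the cluster IP), so clients are not affected by the pod IP changes described above.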

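What about discovering the Service itself?

A Service gives clients a cluster IP that does not change, but:

1. IP addresses are hard to remember.

2. A Service can itself be deleted and recreated, and the new one may get a different cluster IP.

If each Service name could be mapped to the cluster IP it exposes, the way a domain name maps to an IP address, clients would only need to remember the service name and the IP would be looked up automatically. That is automatic discovery of Services.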

In k8s, CoreDNS exists to solve exactly these problems.
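CoreDNS answers for each Service under the name `<service>.<namespace>.svc.<cluster-domain>`; with the Corefile used later in this section the cluster domain is `cluster.local`. A minimal sketch that composes such a name; `svc_fqdn` is a hypothetical helper, not part of CoreDNS or kubectl:

```shell
# Hypothetical helper: build the DNS name CoreDNS serves for a Service.
# Usage: svc_fqdn <service> <namespace> [cluster-domain]
svc_fqdn() {
  # cluster.local is the cluster domain configured in the Corefile (cm.yaml)
  local svc=$1 ns=$2 domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}
```

For example, `svc_fqdn nginx-dp kube-public` prints `nginx-dp.kube-public.svc.cluster.local`, the name queried in the last step of this section.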

Add-ons that implement DNS in K8S:

kube-dns

CoreDNS

I. Configure nginx to serve the directory that holds the k8s YAML manifests

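1. Create the nginx configuration file. The stock nginx.conf only includes `conf.d/*.conf`, so the file name must end in `.conf`:

```shell
vim /etc/nginx/conf.d/k8s-yaml.example.com.conf
```

```nginx
server {
    listen       80;
    server_name  k8s-yaml.example.com;

    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}
```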

2. Create the directories for the manifests (`-p` also creates /data/k8s-yaml):

```shell
mkdir -p /data/k8s-yaml/coredns
```

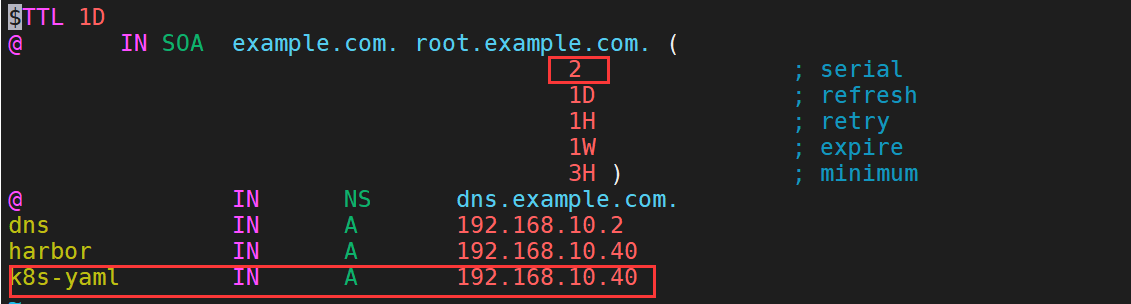
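nginx only picks up the new server block after its configuration is reloaded. A minimal sketch, assuming nginx is managed by systemd; the function name `reload_nginx` and the `NGINX`/`SYSTEMCTL` override variables are hypothetical, added only so the commands can be dry-run:

```shell
# Hypothetical helper: validate the nginx configuration, then reload nginx.
# NGINX and SYSTEMCTL can be overridden (e.g. with "echo") for a dry run.
reload_nginx() {
  local nginx=${NGINX:-nginx} systemctl=${SYSTEMCTL:-systemctl}
  # nginx -t parses the config and exits non-zero on errors,
  # so a broken config never replaces the running one.
  "$nginx" -t && "$systemctl" reload nginx
}
```

Running `reload_nginx` as root checks the syntax first and reloads only if the check passes.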

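3. On the DNS server (192.168.10.2 in this setup), add a forward-resolution (A) record for k8s-yaml.example.com to the zone file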
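The record itself is not shown here. A minimal sketch, assuming a BIND zone file for example.com at `/var/named/example.com.zone` and nginx running on 192.168.10.200 (both hypothetical, substitute your own values); the helper name `add_a_record` is also hypothetical:

```shell
# Hypothetical helper: append an A record to a BIND zone file.
# ZONE_FILE defaults to an assumed path; override it for your layout.
add_a_record() {
  local name=$1 ip=$2 zone_file=${ZONE_FILE:-/var/named/example.com.zone}
  # Skip if a record for this name already exists, so re-running is harmless.
  if grep -qE "^${name}[[:space:]]" "$zone_file"; then
    echo "record for ${name} already present" >&2
    return 0
  fi
  printf '%-12s IN A %s\n' "$name" "$ip" >> "$zone_file"
}
```

Then run, for example, `add_a_record k8s-yaml 192.168.10.200`. If the zone is transferred to secondary servers, also increment the serial number in the SOA record so that they pick up the change.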

4. Restart the DNS server

```shell
systemctl restart named
```

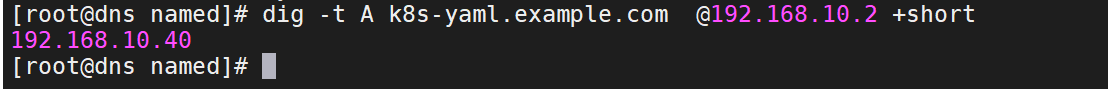

5. Check that the name resolves

```shell
dig -t A k8s-yaml.example.com @192.168.10.2 +short
```

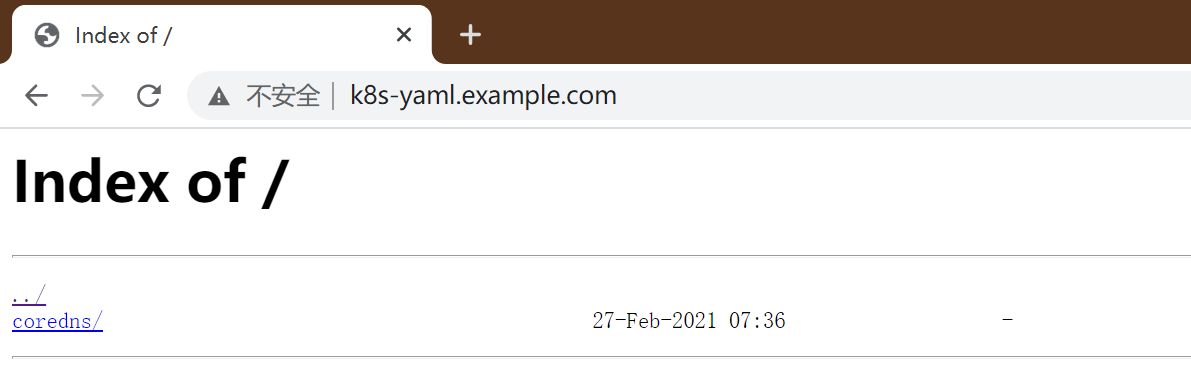

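6. Open http://k8s-yaml.example.com in a browser. Because `autoindex on` is set, nginx should show a listing of /data/k8s-yaml, including the coredns directory.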

II. Deploy CoreDNS (here it runs as a container)

Project and downloads: https://github.com/coredns/coredns

1. Pull the CoreDNS image

```shell
docker pull coredns/coredns
```

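2. Tag the pulled image and push it to the private registry

```shell
docker tag 3885a5b7f138 harbor.example.com/public/coredns
docker push harbor.example.com/public/coredns
```

3885a5b7f138 is the image ID that `docker images` showed for the pulled image; yours will differ. Since no tag is given, the image is tagged and pushed as `latest`, which is the tag the Deployment below uses.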

3. Write the resource manifests (they go in the directory that nginx serves, configured above)

RBAC manifest (cluster permissions)

```shell
cat >/data/k8s-yaml/coredns/rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
# Newer CoreDNS releases watch EndpointSlices; harmless for older ones
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
EOF
```

ConfigMap manifest

```shell
cat >/data/k8s-yaml/coredns/cm.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 10.10.0.0/16   # Service cluster IP range
        prometheus :9153                        # serves the metrics port exposed in dp.yaml/svc.yaml
        forward . 192.168.10.2                  # upstream DNS server
        cache 30
        loop
        reload
        loadbalance
    }
EOF
```
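In the `kubernetes` line, the CIDR after `cluster.local` is a reverse zone: CoreDNS lets a reverse zone be written in CIDR notation, so it also answers PTR queries for Service IPs in that range. The hypothetical helper below reproduces the CIDR-to-`in-addr.arpa` conversion for octet-aligned prefixes (/8, /16, /24 only), for checking which reverse zone a range maps to:

```shell
# Hypothetical helper: convert an octet-aligned IPv4 CIDR (/8, /16, /24)
# into the in-addr.arpa reverse zone it corresponds to.
cidr_to_reverse_zone() {
  local ip=${1%/*} prefix=${1#*/} zone=in-addr.arpa i n
  local -a octets
  IFS=. read -r -a octets <<< "$ip"
  case $prefix in
    8|16|24) n=$(( prefix / 8 )) ;;
    *) echo "only /8, /16 and /24 prefixes are handled" >&2; return 1 ;;
  esac
  # Prepending the first n octets one by one reverses their order:
  # 10.20.0.0/16 -> 20.10.in-addr.arpa
  for (( i = 0; i < n; i++ )); do
    zone=${octets[i]}.$zone
  done
  echo "$zone"
}
```

`cidr_to_reverse_zone 10.10.0.0/16` prints `10.10.in-addr.arpa`, the reverse zone that covers Service IPs such as 10.10.0.2.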

Deployment manifest

```shell
cat >/data/k8s-yaml/coredns/dp.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.example.com/public/coredns:latest
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
EOF
```

Service manifest

```shell
cat >/data/k8s-yaml/coredns/svc.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 10.10.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF
```
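The fixed `clusterIP: 10.10.0.2` is only accepted if it lies inside the Service CIDR (kube-apiserver's `--service-cluster-ip-range`, assumed here to be 10.10.0.0/16 to match the Corefile). Kubelet's `--cluster-dns` (or `clusterDNS` in the kubelet config file) should also point to this address so that pods actually use CoreDNS. A hypothetical pure-bash check of the first condition:

```shell
# Hypothetical helpers: convert dotted IPv4 to an integer and test CIDR membership.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  # Network mask from the prefix length, e.g. /16 -> 0xFFFF0000
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Succeeds (exit 0) when both addresses share the same network bits
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example: `ip_in_cidr 10.10.0.2 10.10.0.0/16 && echo "clusterIP is inside the Service CIDR"`.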

4. Apply the manifests

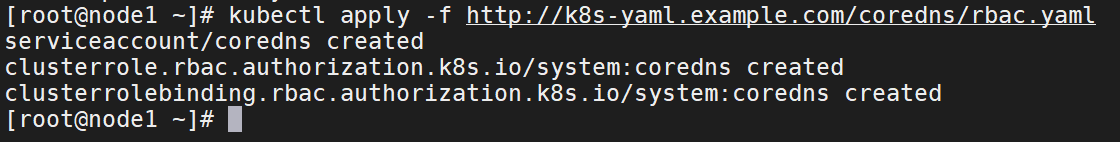

RBAC manifest

```shell
kubectl apply -f http://k8s-yaml.example.com/coredns/rbac.yaml
```

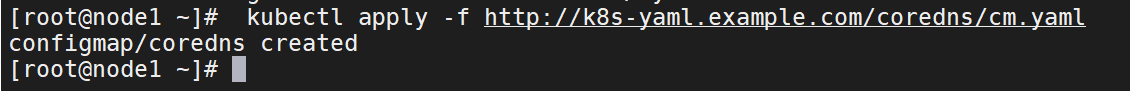

ConfigMap manifest

```shell
kubectl apply -f http://k8s-yaml.example.com/coredns/cm.yaml
```

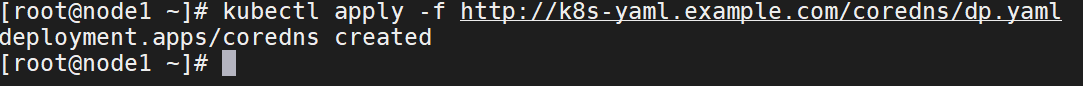

Deployment manifest

```shell
kubectl apply -f http://k8s-yaml.example.com/coredns/dp.yaml
```

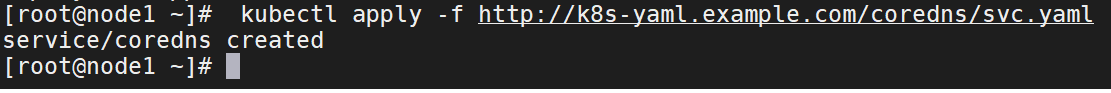

Service manifest

```shell
kubectl apply -f http://k8s-yaml.example.com/coredns/svc.yaml
```

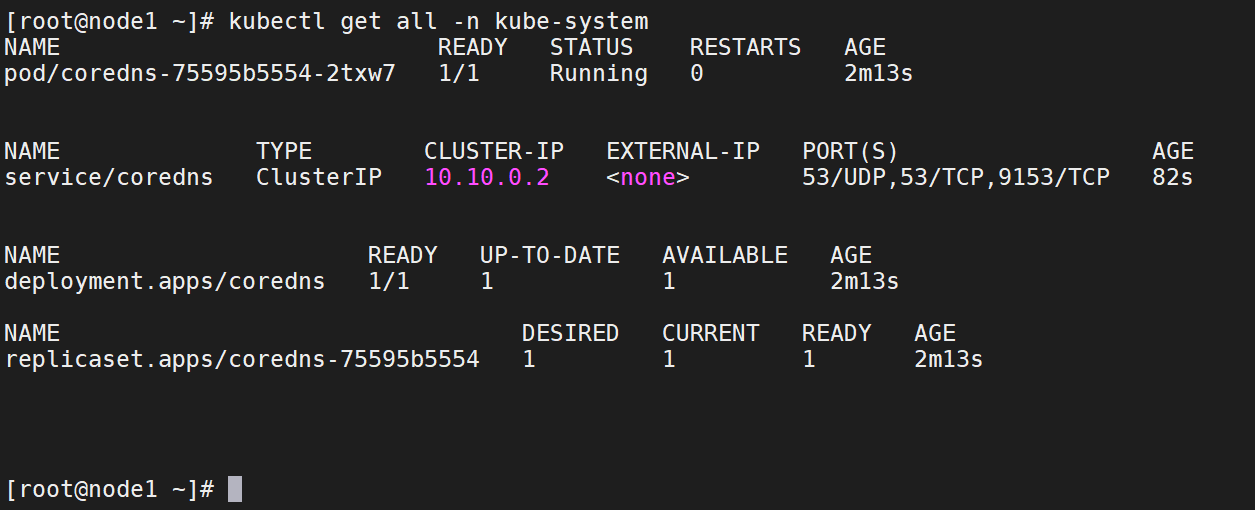
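The four `kubectl apply` calls can also be run in one go. A sketch; `apply_coredns` and the `KUBECTL` override (useful for a dry run with `KUBECTL=echo`) are hypothetical. The order matches the steps above, so the ServiceAccount and ConfigMap the Deployment references already exist when its pods are created:

```shell
# Hypothetical helper: apply the CoreDNS manifests served by nginx, in order.
apply_coredns() {
  local kubectl=${KUBECTL:-kubectl}
  local base=http://k8s-yaml.example.com/coredns
  local f
  # rbac and cm first so the ServiceAccount and ConfigMap exist before the pods start
  for f in rbac cm dp svc; do
    "$kubectl" apply -f "${base}/${f}.yaml" || return 1
  done
}
```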

5. Check the CoreDNS status

```shell
kubectl get all -n kube-system
```

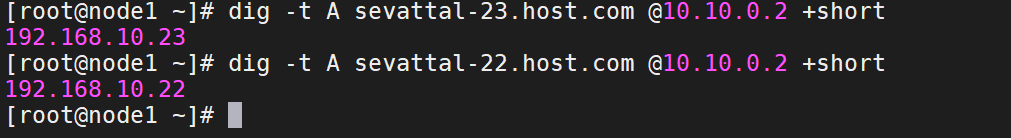
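Instead of re-running `kubectl get` until the pod shows Running, `kubectl rollout status` can block until the Deployment is ready. A sketch with a hypothetical helper name and `KUBECTL` override; the 120 s timeout is an arbitrary choice:

```shell
# Hypothetical helper: wait for the coredns Deployment to finish rolling out,
# then list its pods (label k8s-app=coredns, as set in dp.yaml).
wait_coredns() {
  local kubectl=${KUBECTL:-kubectl}
  "$kubectl" rollout status deployment/coredns -n kube-system --timeout=120s &&
    "$kubectl" get pods -n kube-system -l k8s-app=coredns -o wide
}
```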

6. Check that CoreDNS is working

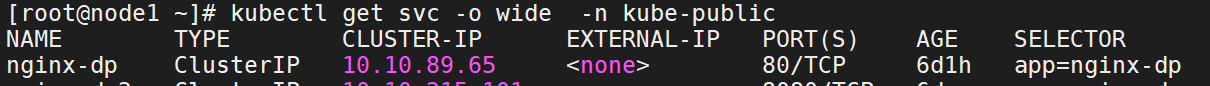

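7. Create a Service for a test Deployment and expose a port (step 8 queries a Service named nginx-dp in the kube-public namespace)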
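The command for this step is not shown. A sketch, assuming a Deployment named `nginx-dp` already exists in `kube-public` and listens on port 80 (the names match the lookup in step 8; the port is an assumption). `expose_dp` and the `KUBECTL` override are hypothetical:

```shell
# Hypothetical helper: create a ClusterIP Service for an existing Deployment.
# Defaults assume Deployment nginx-dp in kube-public listening on port 80.
expose_dp() {
  local kubectl=${KUBECTL:-kubectl}
  local name=${1:-nginx-dp} ns=${2:-kube-public} port=${3:-80}
  # kubectl expose reuses the Deployment's selector and, by default,
  # names the Service after the Deployment.
  "$kubectl" expose deployment "$name" --port="$port" -n "$ns" &&
    "$kubectl" get svc "$name" -n "$ns"
}
```

The cluster IP printed by `kubectl get svc` is what the lookup in step 8 should return.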

8. Test whether the Service name resolves to its cluster IP (forward lookup) through CoreDNS.

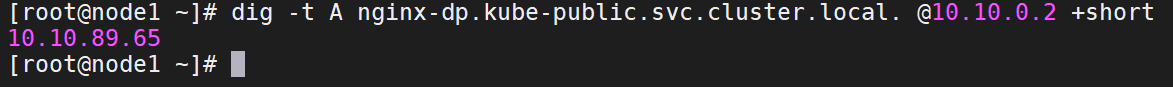

```shell
dig -t A nginx-dp.kube-public.svc.cluster.local. @10.10.0.2 +short
```
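`dig` does not apply search domains by default, which is why the query above uses the full name. Inside a pod whose `dnsPolicy` is `ClusterFirst` (the default), kubelet writes the search domains `<namespace>.svc.cluster.local svc.cluster.local cluster.local` and `options ndots:5` into /etc/resolv.conf, assuming its cluster domain is cluster.local. A short name is then expanded through that list. A hypothetical helper that lists the candidate names in the order a glibc-style resolver tries them (search domains inherited from the node are omitted):

```shell
# Hypothetical helper: list the names a pod's resolver tries for a name
# with fewer than 5 dots (ndots:5), so the search domains come first.
search_candidates() {
  local name=$1 ns=$2 domain=${3:-cluster.local} d
  for d in "$ns.svc.$domain" "svc.$domain" "$domain"; do
    echo "$name.$d"
  done
  # Last resort: the name as given, treated as absolute
  echo "$name."
}
```

For example, `search_candidates nginx-dp kube-public` lists nginx-dp.kube-public.svc.cluster.local first, so from a pod in kube-public the plain name `nginx-dp` resolves to the same Service.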