K8S Binary Deployment

Reference: https://blog.csdn.net/fanjianhai/article/details/109444648

Reference: https://www.bilibili.com/video/BV1PJ411h7Sw?p=25

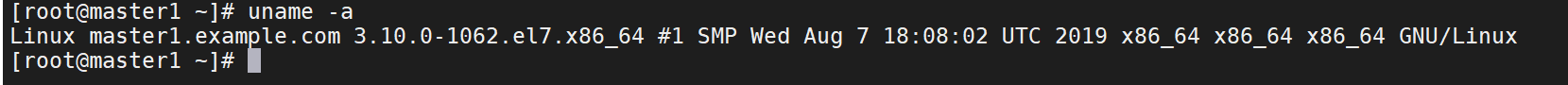

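Deployment environment (this lab uses CentOS 7)

Linux kernel requirement

Note: Docker needs a Linux kernel of version 3.10 or later. Any distribution that meets this requirement will work, so pick the one you know best. This guide uses CentOS because I am most familiar with CentOS and Red Hat.

IP address allocation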

| Host | Host IP | VIP | Business domain | Host domain | Container IP | Role |
|---|---|---|---|---|---|---|
| Master1 | 192.168.10.11 | 192.168.10.10 | | sevattal-11.host.com | | Proxy (L4, L7) master, reverse proxy |
| Master2 | 192.168.10.12 | 192.168.10.10 | | sevattal-12.host.com | | Proxy (L4, L7) standby01, reverse proxy |
| Master3 | 192.168.10.13 | 192.168.10.10 | | sevattal-13.host.com | | Proxy (L4, L7) standby02, reverse proxy |
| Node1 | 192.168.10.21 | | | sevattal-21.host.com | 172.7.21.1/24 | etcd, apiserver, kube-controller-manager, kube-scheduler, kubelet |
| Node2 | 192.168.10.22 | | | sevattal-22.host.com | 172.7.22.1/24 | etcd, apiserver, kube-controller-manager, kube-scheduler, kubelet |
| Node3 | 192.168.10.23 | | | sevattal-23.host.com | 172.7.23.1/24 | etcd, apiserver, kube-controller-manager, kube-scheduler, kubelet |
| Warehouse | 192.168.10.40 | | harbor.example.com | sevattal-40.host.com | 172.7.40.1/24 | Harbor image registry |
| CFSSL | 192.168.10.30 | | | sevattal-30.host.com | | CFSSL certificate issuing |
| DNS | 192.168.10.2 | | dns.example.com | dns.host.com | | DNS service |

Network segments

Service network: 10.10.0.0/16

Pod network: 172.7.0.0/16

Node network: 192.168.10.0/24

I. Preparing the servers

Run the following steps on every host.

Hostname-based communication: /etc/hosts

192.168.10.10 master.example.com master

192.168.10.11 master1.example.com master1

192.168.10.12 master2.example.com master2

192.168.10.13 master3.example.com master3

192.168.10.21 node1.example.com node1

192.168.10.22 node2.example.com node2

192.168.10.23 node3.example.com node3

192.168.10.30 tls.example.com tls

192.168.10.40 warehouse.example.com warehouse
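The entries above can also be added non-interactively. The following is a minimal sketch, assuming a hypothetical helper `add_host` and a scratch file standing in for /etc/hosts so that it is safe to try; it skips any address that is already present.

```shell
#!/bin/sh
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts on a real host.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# add_host is a hypothetical helper (not a system command).
# $1 = IP address, $2 = FQDN, $3 = short alias
add_host() {
    # Append the entry only if no line already starts with this address.
    if ! grep -q "^$1[[:space:]]" "$HOSTS_FILE"; then
        printf '%s %s %s\n' "$1" "$2" "$3" >> "$HOSTS_FILE"
    fi
}

add_host 192.168.10.10 master.example.com master
add_host 192.168.10.11 master1.example.com master1
add_host 192.168.10.12 master2.example.com master2
add_host 192.168.10.13 master3.example.com master3
add_host 192.168.10.21 node1.example.com node1
add_host 192.168.10.22 node2.example.com node2
add_host 192.168.10.23 node3.example.com node3
add_host 192.168.10.30 tls.example.com tls
add_host 192.168.10.40 warehouse.example.com warehouse

cat "$HOSTS_FILE"
```

Because each call checks for the address first, the script can be rerun on a host without producing duplicate lines.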

Disable SELinux on all K8S hosts

setenforce 0

vim /etc/selinux/config

SELINUX=disabled

Disable the firewall on all K8S hosts

systemctl disable firewalld

systemctl stop firewalld

Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
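To see what the sed expression does before touching the real /etc/fstab, it can be run against a sample file. This is a small sketch assuming GNU sed (as shipped with CentOS) and a made-up two-line fstab; the `-i` flag is dropped so nothing is modified in place.

```shell
#!/bin/sh
# Build a scratch fstab-like file; the device names are illustrative only.
FSTAB_SAMPLE="$(mktemp)"
cat > "$FSTAB_SAMPLE" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Same expression as above: every line that mentions "swap" is prefixed
# with '#', which turns the swap mount into a comment.
sed -r 's/.*swap.*/#&/' "$FSTAB_SAMPLE"
```

Note that the expression also matches lines that are already commented out, so running it in place a second time adds another `#` to those lines.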

Time synchronization (ntpdate is used here)

yum install ntpdate

echo '*/30 * * * * /usr/sbin/ntpdate 192.168.10.1 >/dev/null 2>&1' > /tmp/crontab2.tmp

crontab /tmp/crontab2.tmp

systemctl enable crond

systemctl start crond

chronyd is the recommended tool for time synchronization.

Set up the yum repositories and install epel-release

yum install epel-release -y

Install common tools (for later maintenance)

yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

II. Installing the DNS service on the DNS host

Run the following steps on the DNS host.

1. Install the DNS service

yum install bind -y

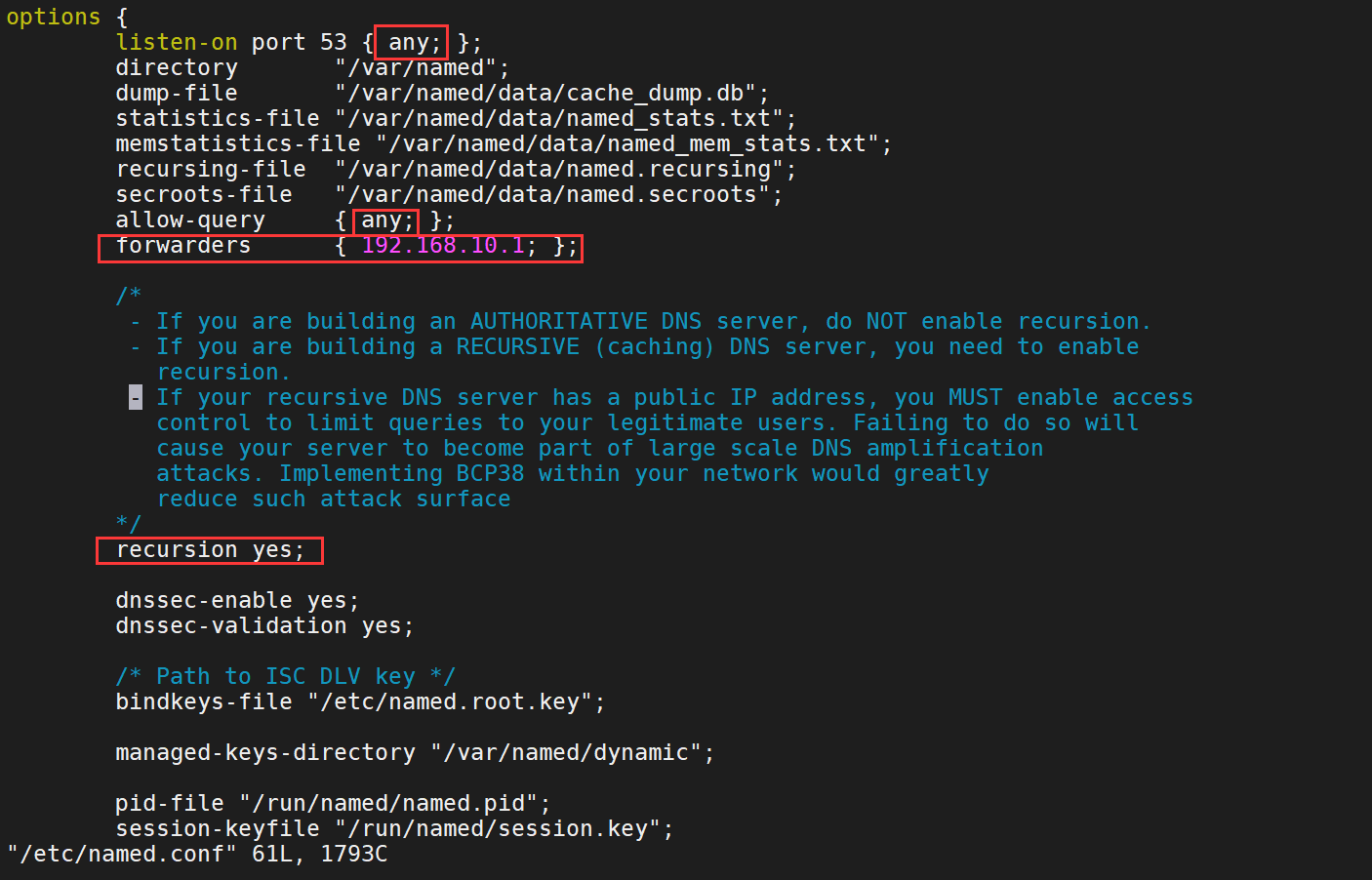

2. Edit the /etc/named.conf configuration file

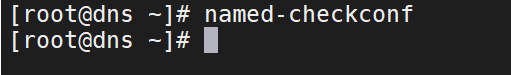

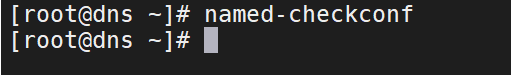

3. Check the configuration with named-checkconf

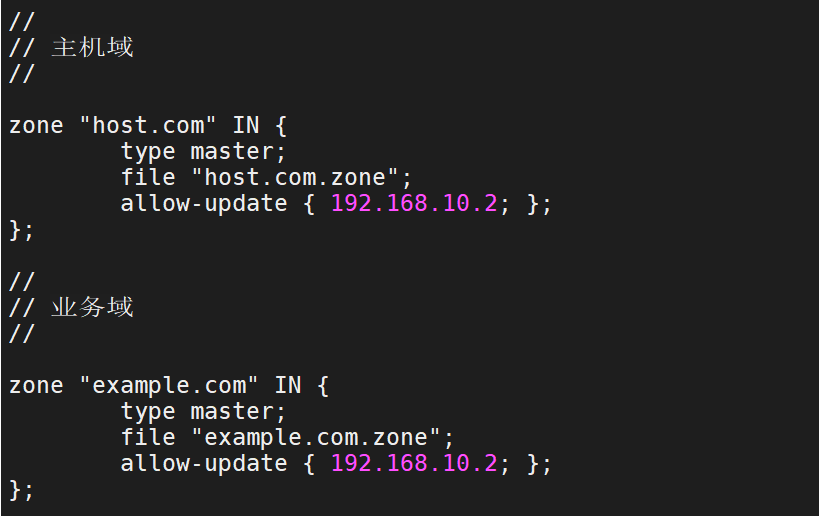

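4. Configure the zone definitions in /etc/named.rfc1912.zones

It is best to keep the host domain and the business domain separate, which makes maintenance easier. The host domain is usually a made-up domain that is only used locally.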

//

// Host domain

//

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 192.168.10.2; };

};

//

// Business domain

//

zone "example.com" IN {

type master;

file "example.com.zone";

allow-update { 192.168.10.2; };

};

5. Check the configuration again with named-checkconf

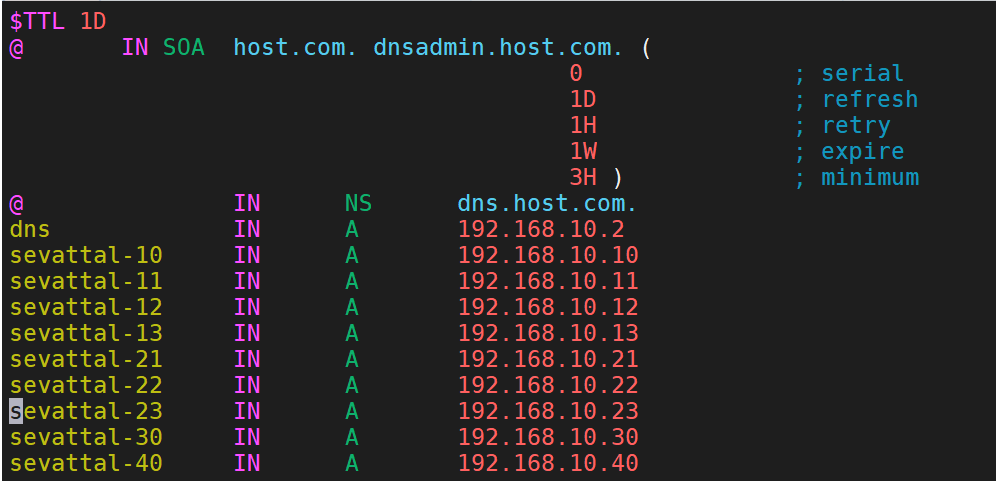

6. Create the host.com.zone and example.com.zone files

Host domain: /var/named/host.com.zone (if the file does not exist, copy named.empty and edit it)

$TTL 1D

@ IN SOA host.com. dnsadmin.host.com. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

@ IN NS dns.host.com.

dns IN A 192.168.10.2

sevattal-10 IN A 192.168.10.10

sevattal-11 IN A 192.168.10.11

sevattal-12 IN A 192.168.10.12

sevattal-13 IN A 192.168.10.13

sevattal-21 IN A 192.168.10.21

sevattal-22 IN A 192.168.10.22

sevattal-23 IN A 192.168.10.23

sevattal-30 IN A 192.168.10.30

sevattal-40 IN A 192.168.10.40
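The host-domain records above follow one pattern: the label is `sevattal-` plus the last octet of the address. A minimal sketch, assuming that pattern holds for every host and using a hypothetical helper `host_record`, that prints the A records for a list of addresses:

```shell
#!/bin/sh
# host_record is a hypothetical helper, not part of BIND.
# It prints one zone-file A record for an address, naming the host
# "sevattal-<last octet>" as in the host.com zone above.
host_record() {
    ip="$1"
    printf 'sevattal-%s IN A %s\n' "${ip##*.}" "$ip"
}

for ip in 192.168.10.10 192.168.10.11 192.168.10.21 192.168.10.40; do
    host_record "$ip"
done
```

The output can be pasted into the zone file; the `dns` record and the SOA/NS lines still have to be written by hand.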

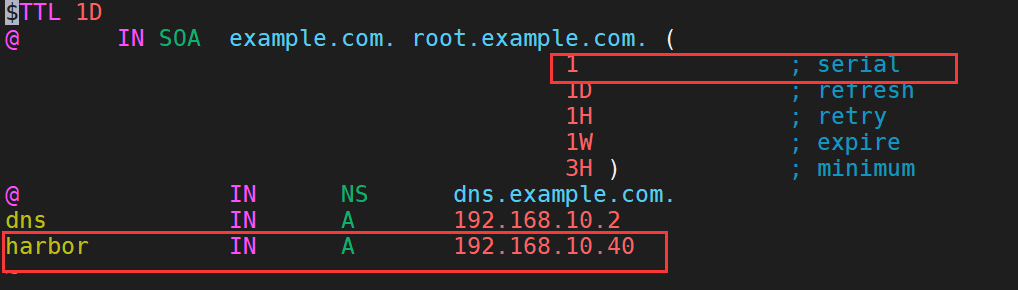

Business domain: /var/named/example.com.zone (if the file does not exist, copy named.empty and edit it)

$TTL 1D

@ IN SOA example.com. root.example.com. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

@ IN NS dns.example.com.

dns IN A 192.168.10.2

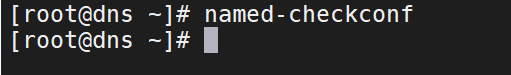

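Check the configuration with named-checkconf; the zone files themselves can be checked with named-checkzone.

7. Restart the named service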

systemctl restart named

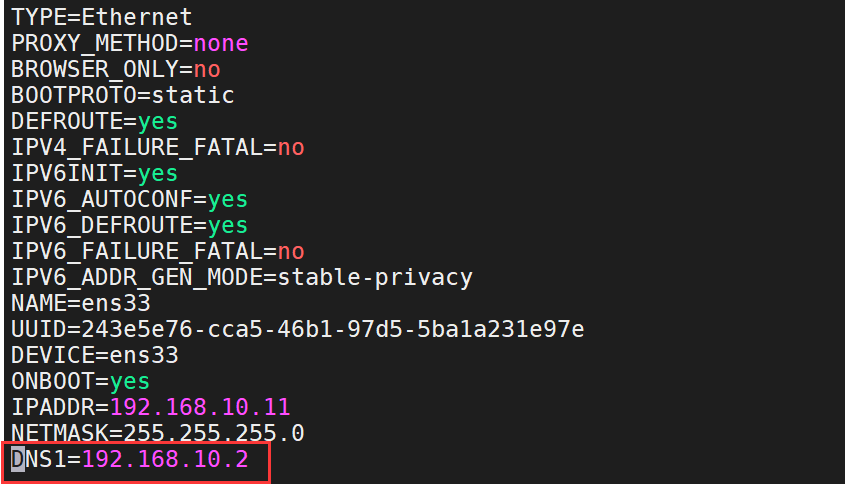

8. Point every other machine at the new DNS server

Edit the NIC configuration file ifcfg-ens33

vim /etc/sysconfig/network-scripts/ifcfg-ens33

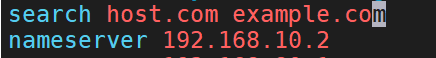

Edit /etc/resolv.conf

vim /etc/resolv.conf

Restart the network service

systemctl restart network

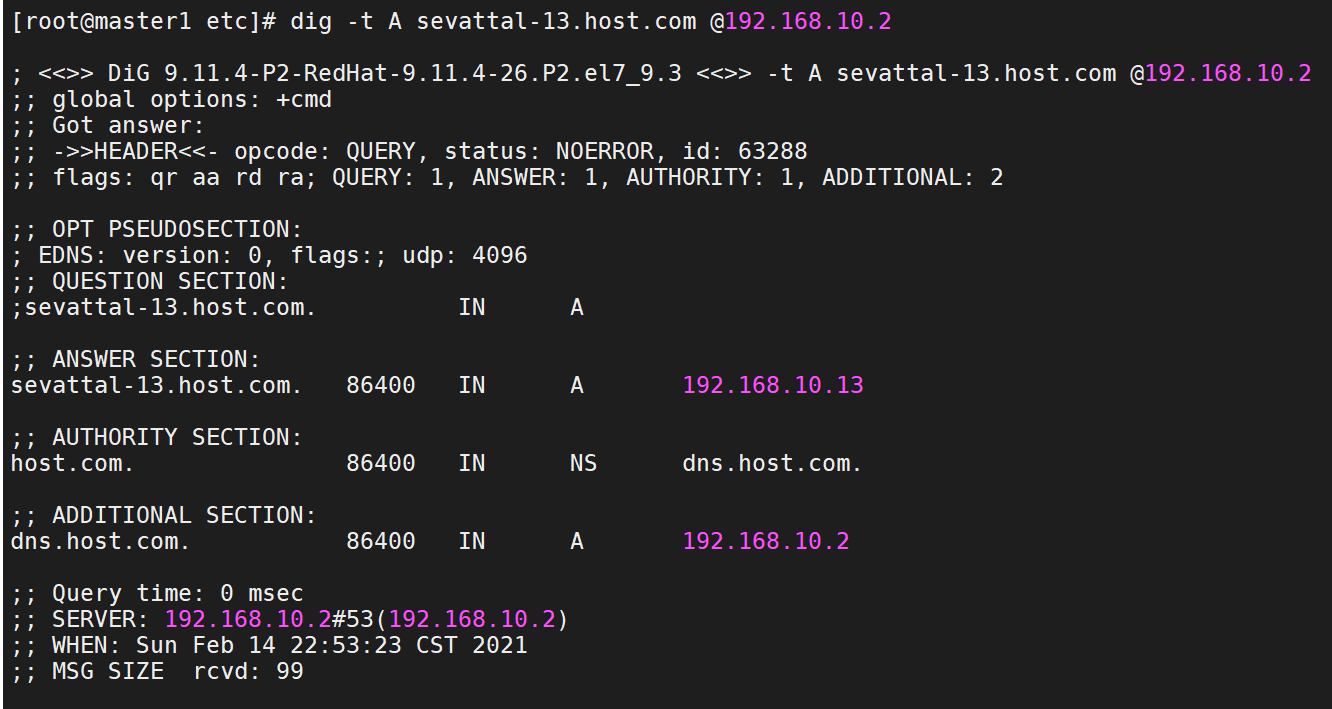

9. Verify that DNS resolution works

dig -t A sevattal-13.host.com @192.168.10.2

III. Installing CFSSL and issuing certificates

1. Download the CFSSL binaries (site: http://pkg.cfssl.org/)

wget http://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

wget http://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

wget http://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

2. Make the cfssl binaries executable

chmod +x /usr/bin/cfssl*

3. Create the certificate directory

mkdir /opt/certs

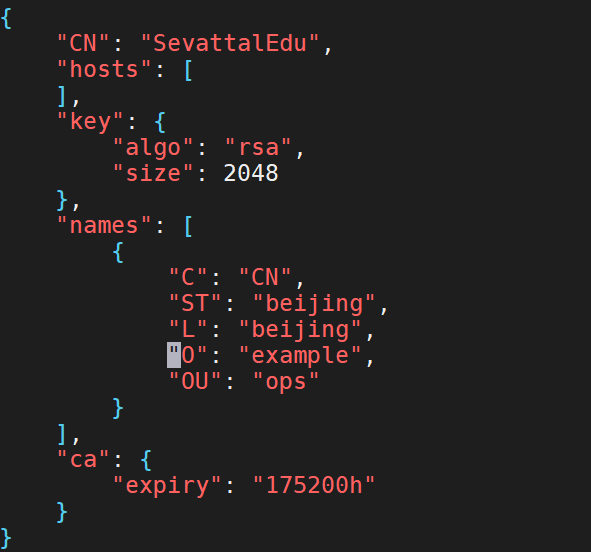

4. Create the CA certificate signing request file /opt/certs/ca-csr.json

{

"CN": "SevattalEdu",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

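Field reference:

CN: common name. Browsers use this field to check whether a site is legitimate, so it is usually a domain name.

algo: key algorithm

size: key length

C: country

ST: state or province

L: locality or city

O: organization or company name

OU: organizational unit or department

expiry: validity period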
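The `expiry` value is given in hours, which is hard to read at a glance. A quick arithmetic sketch (the helper name `hours_to_years` is made up for illustration, and a year is taken as 365 days) shows that 175200h corresponds to 20 years:

```shell
#!/bin/sh
# hours_to_years is a hypothetical helper: integer hours -> whole 365-day years.
hours_to_years() {
    echo $(( $1 / 24 / 365 ))
}

hours_to_years 175200   # prints 20
```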

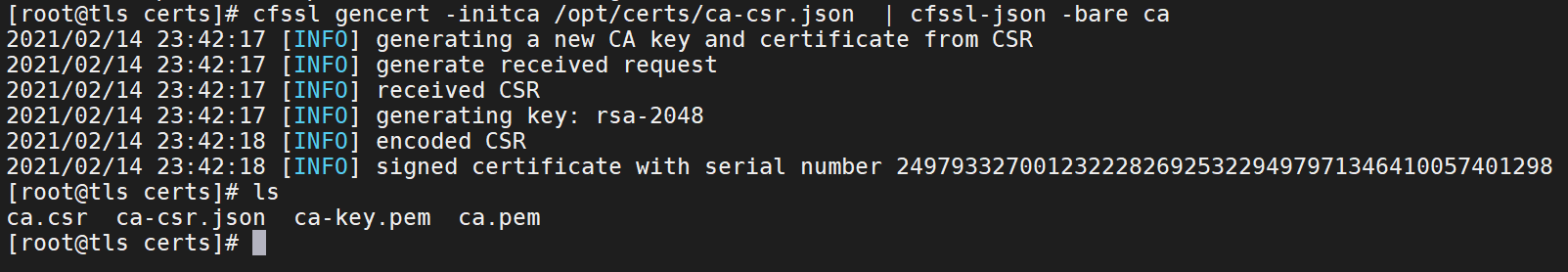

5. Sign the CA certificate from the /opt/certs directory

cd /opt/certs

cfssl gencert -initca /opt/certs/ca-csr.json | cfssl-json -bare ca

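IV. Installing Docker CE on the K8S hosts

Docker CE is needed on these hosts: node1, node2, node3, warehouse

1. Use the install script from the Docker site (any other method works too, as long as Docker CE ends up installed)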

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

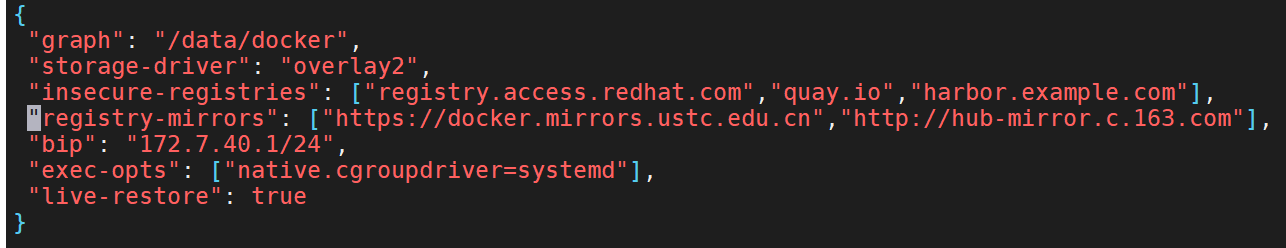

2. Create the /etc/docker/daemon.json configuration file; if the file or its directory does not exist, create it by hand

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.example.com"],

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn","http://hub-mirror.c.163.com"],

"bip": "172.7.40.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}
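The `bip` value shown above matches the Warehouse row of the address table; the table assigns every Docker host its own container subnet (for example 172.7.21.1/24 on Node1), so `bip` presumably has to be adjusted per host. A minimal sketch, assuming the mapping "last octet of the host IP becomes the third octet of the bridge address" read off that table, with a hypothetical helper `bip_for_ip`:

```shell
#!/bin/sh
# bip_for_ip is a hypothetical helper that derives the docker0 bridge
# address from a host IP, following the address table:
#   192.168.10.21 -> 172.7.21.1/24
bip_for_ip() {
    last_octet="${1##*.}"
    echo "172.7.${last_octet}.1/24"
}

for ip in 192.168.10.21 192.168.10.22 192.168.10.23 192.168.10.40; do
    printf '%s -> "bip": "%s"\n' "$ip" "$(bip_for_ip "$ip")"
done
```

Giving each host a distinct /24 inside 172.7.0.0/16 keeps container addresses unique across the cluster.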

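daemon.json options in detail

This file is the configuration file of the Docker Engine and covers almost every option that can be passed on the dockerd command line.

Regardless of platform or how it is started, Docker reads its configuration from here by default, so the daemon configuration can be managed in one place across systems.

For the meaning of each option, see man dockerd or the official documentation.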


{

"api-cors-header": "", // set CORS headers in the Engine API

"authorization-plugins": [], // authorization plugins to load

"bridge": "", // attach containers to a network bridge

"cgroup-parent": "", // parent cgroup for all containers

"cluster-store": "", // URL of the distributed storage backend

"cluster-store-opts": {}, // cluster store options (default map[])

"cluster-advertise": "", // address or interface name to advertise

"debug": true, // enable debug mode, which prints much more startup information (default false)

"default-gateway": "", // default IPv4 gateway for containers

"default-gateway-v6": "", // default IPv6 gateway for containers

"default-runtime": "runc", // default OCI runtime for containers (default "runc")

"default-ulimits": {}, // default ulimits for containers (default [])

"dns": ["192.168.1.1"], // DNS servers for containers; visible in the container's /etc/resolv.conf

"dns-opts": [], // additional options for the container's /etc/resolv.conf

"dns-search": [], // DNS search domains for containers; with .example.com set, a lookup for a host named host also tries host.example.com. Note: if unset, Docker configures containers from the host's /etc/resolv.conf.

"exec-opts": [], // runtime execution options

"exec-root": "", // root directory for execution state files (default "/var/run/docker")

"fixed-cidr": "", // IPv4 subnet for fixed IPs

"fixed-cidr-v6": "", // IPv6 subnet for fixed IPs

"data-root": "/var/lib/docker", // root directory used by Docker at runtime (default /var/lib/docker)

"group": "", // group for the UNIX socket (default "docker")

"hosts": [], // daemon sockets to listen on (same as the -H option)

"icc": false, // enable inter-container communication (default true)

"ip": "0.0.0.0", // default IP when binding container ports (default 0.0.0.0)

"iptables": false, // enable addition of iptables rules (default true)

"ipv6": false, // enable IPv6 networking

"ip-forward": false, // enable net.ipv4.ip_forward (default true); check inside a container with sysctl -a | grep net.ipv4.ip_forward

"ip-masq": false, // enable IP masquerading (default true)

"labels": ["nodeName=node-121"], // labels for the Docker host; a handy feature, e.g. --label nodeName=host-121

"live-restore": true, // enable live restore of Docker while containers are still running

"log-driver": "", // default driver for container logs (default "json-file")

"log-level": "", // logging level ("debug", "info", "warn", "error", "fatal") (default "info")

"max-concurrent-downloads": 3, // maximum concurrent downloads for each pull (default 3)

"max-concurrent-uploads": 5, // maximum concurrent uploads for each push (default 5)

"mtu": 0, // container network MTU

"oom-score-adjust": -500, // oom_score_adj of the daemon (default -500)

"pidfile": "", // PID file of the Docker daemon

"raw-logs": false, // log with full timestamps

"selinux-enabled": false, // enable SELinux support (default false)

"storage-driver": "", // storage driver to use

"swarm-default-advertise-addr": "", // default address or interface for the swarm advertise address

"tls": true, // enable TLS (default false)

"tlscacert": "", // path to the CA certificate to trust (default ~/.docker/ca.pem)

"tlscert": "", // path to the TLS certificate file (default ~/.docker/cert.pem)

"tlskey": "", // path to the TLS key file (default ~/.docker/key.pem)

"tlsverify": true, // use TLS and verify the remote side (default false)

"userland-proxy": false, // use the userland proxy for loopback traffic (default true)

"userns-remap": "", // user/group setting for user namespaces

"bip": "192.168.88.0/22", // bridge IP

"registry-mirrors": ["https://192.498.89.232:89"], // registry mirrors

"insecure-registries": ["120.123.122.123:12312"], // private registries that may be reached over plain HTTP

"storage-opts": [

"overlay2.override_kernel_check=true",

"overlay2.size=15G"

], // storage driver options

"log-opts": {

"max-file": "3",

"max-size": "10m"

} // default log driver options for containers

}

3. Create the /data/docker directory

mkdir -p /data/docker

4. Restart the docker service

systemctl restart docker

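V. Setting up the private image registry on Warehouse

Official Harbor GitHub repository: https://github.com/goharbor/harbor

I have already downloaded the offline installer: harbor-offline-installer-v2.0.6.tgz

1. Copy the Harbor package to /opt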

cp harbor-offline-installer-v2.0.6.tgz /opt/

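2. Extract the Harbor package

tar vxf /opt/harbor-offline-installer-v2.0.6.tgz -C /opt

3. Rename the harbor directory

mv /opt/harbor /opt/harbor-2.0.6

4. Create the harbor symlink

ln -s /opt/harbor-2.0.6/ /opt/harbor

5. Copy the harbor.yml configuration template

cd /opt/harbor

cp harbor.yml.tmpl harbor.yml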

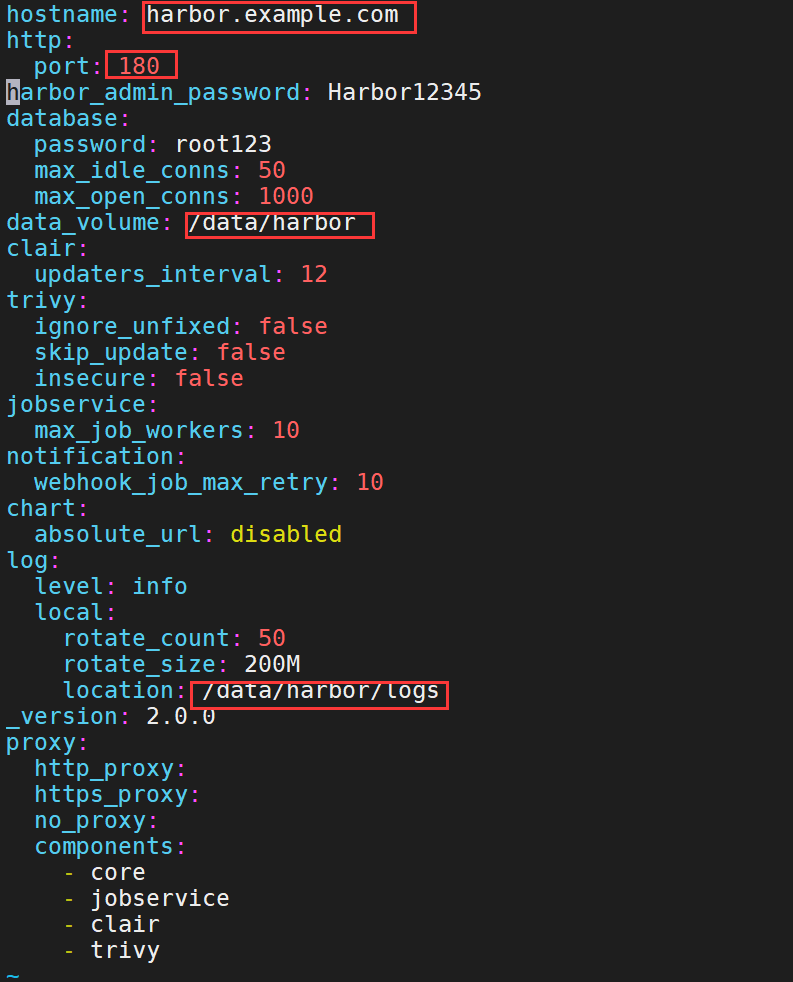

6. Edit the harbor.yml configuration file

hostname: harbor.example.com

http:

port: 180

harbor_admin_password: Harbor12345

database:

password: root123

max_idle_conns: 50

max_open_conns: 1000

data_volume: /data/harbor

clair:

updaters_interval: 12

trivy:

ignore_unfixed: false

skip_update: false

insecure: false

jobservice:

max_job_workers: 10

notification:

webhook_job_max_retry: 10

chart:

absolute_url: disabled

log:

level: info

local:

rotate_count: 50

rotate_size: 200M

location: /data/harbor/logs

_version: 2.0.0

proxy:

http_proxy:

https_proxy:

no_proxy:

components:

- core

- jobservice

- clair

- trivy

7. Create the data and log directories

mkdir /data/harbor/logs -p

8. Install docker-compose

yum install docker-compose -y

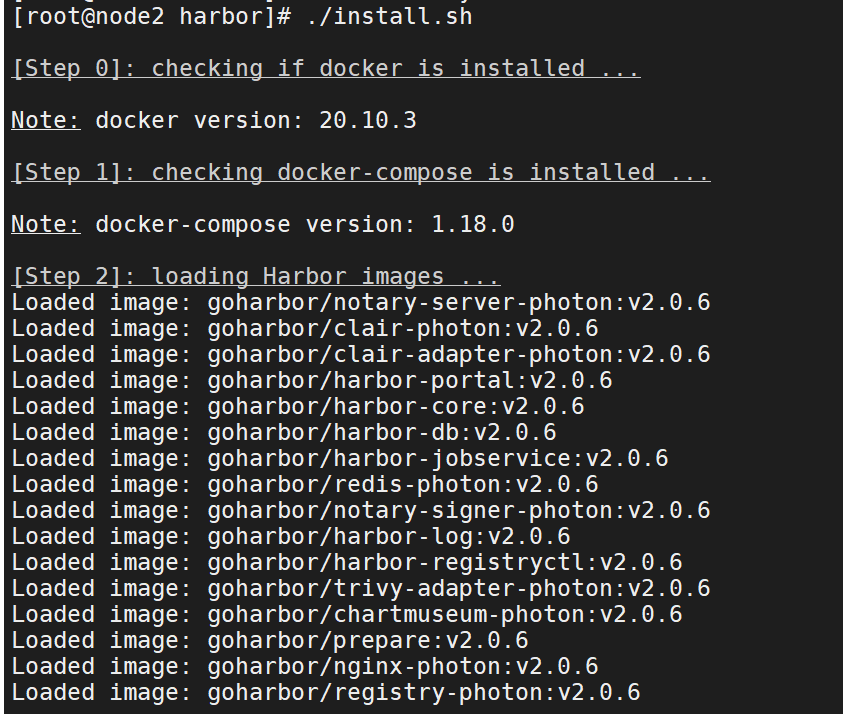

9. Run Harbor's install.sh script

./install.sh

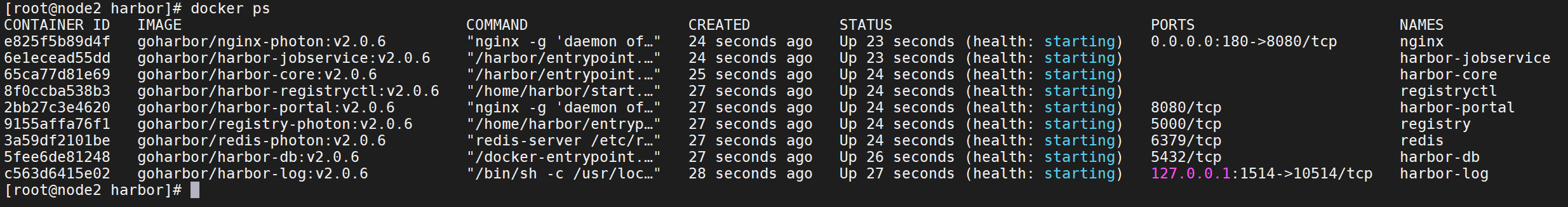

10. Check the containers with docker ps

11. Install nginx

yum install nginx -y

12. Create the nginx configuration file /etc/nginx/conf.d/harbor.example.com.conf

server {

listen 80;

server_name harbor.example.com;

client_max_body_size 1000m;

location / {

proxy_pass http://127.0.0.1:180;

}

}

13. Start nginx

systemctl start nginx

systemctl enable nginx

14. On the DNS server, add the Harbor record (harbor, pointing at 192.168.10.40) to the business domain

vim /var/named/example.com.zone

15. Restart the DNS server

systemctl restart named

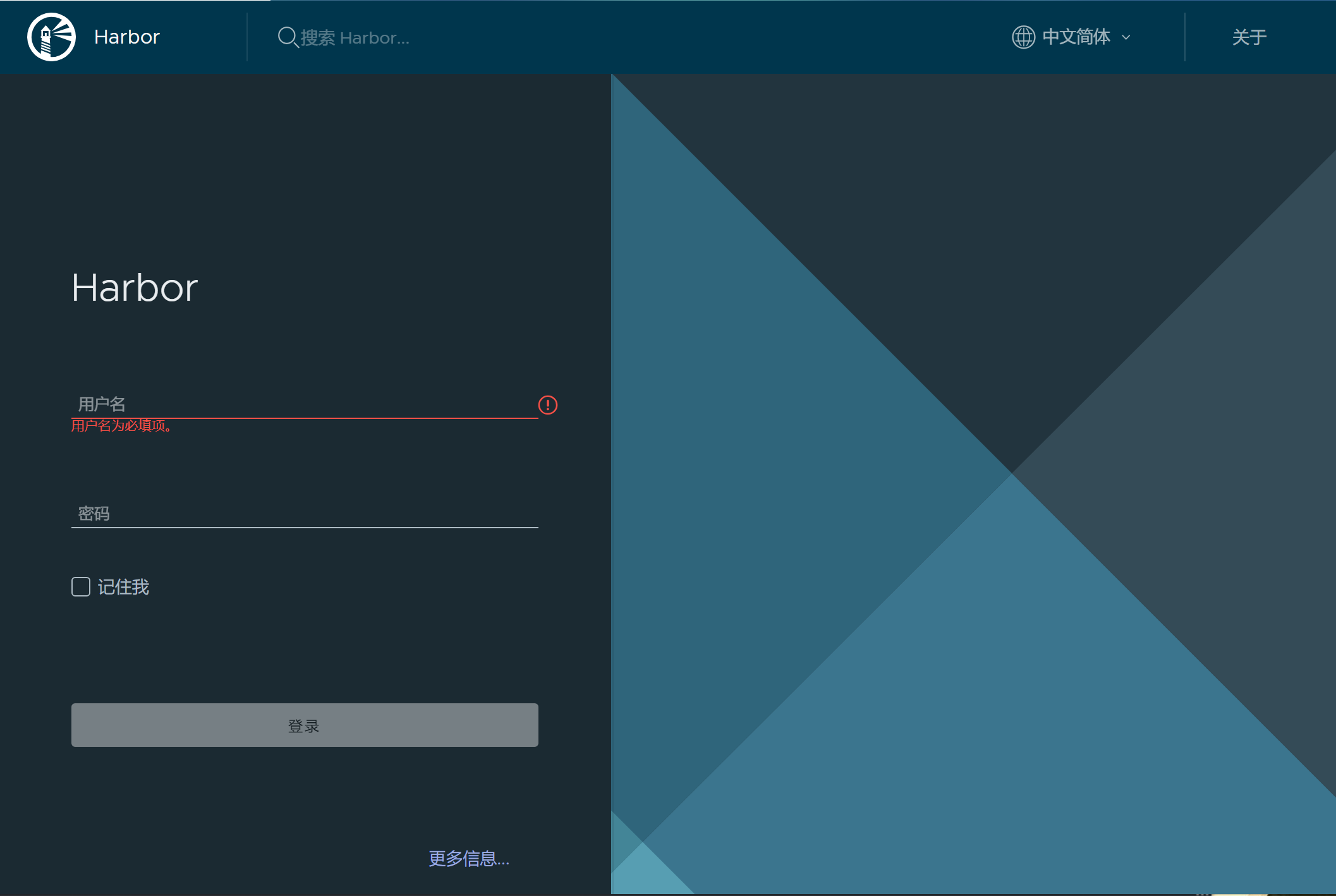

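16. Open harbor.example.com in a browser (the machine running the browser must use the DNS server configured above)

Account: admin, password: Harbor12345

The password is the one set in harbor.yml during installation.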

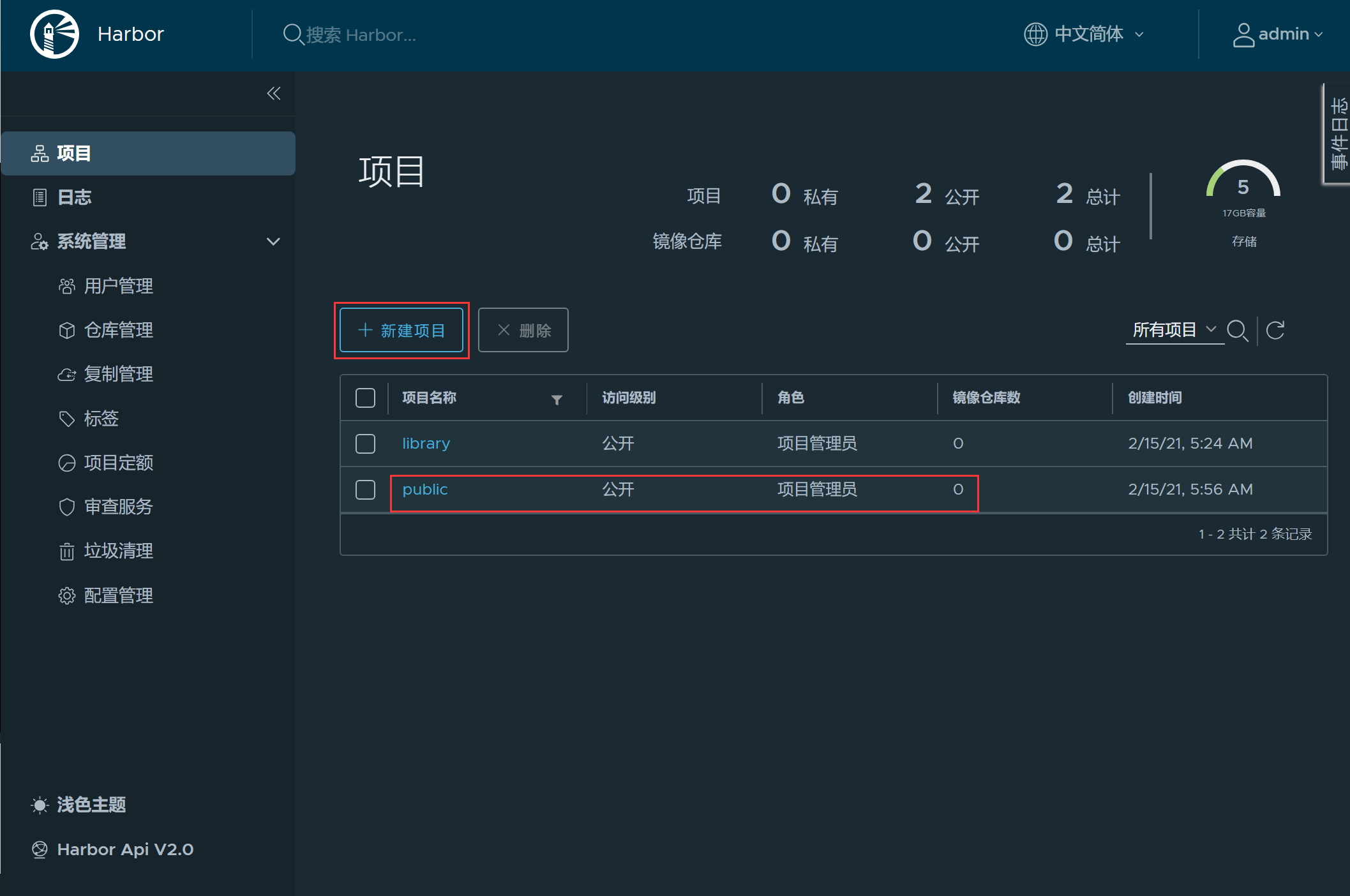

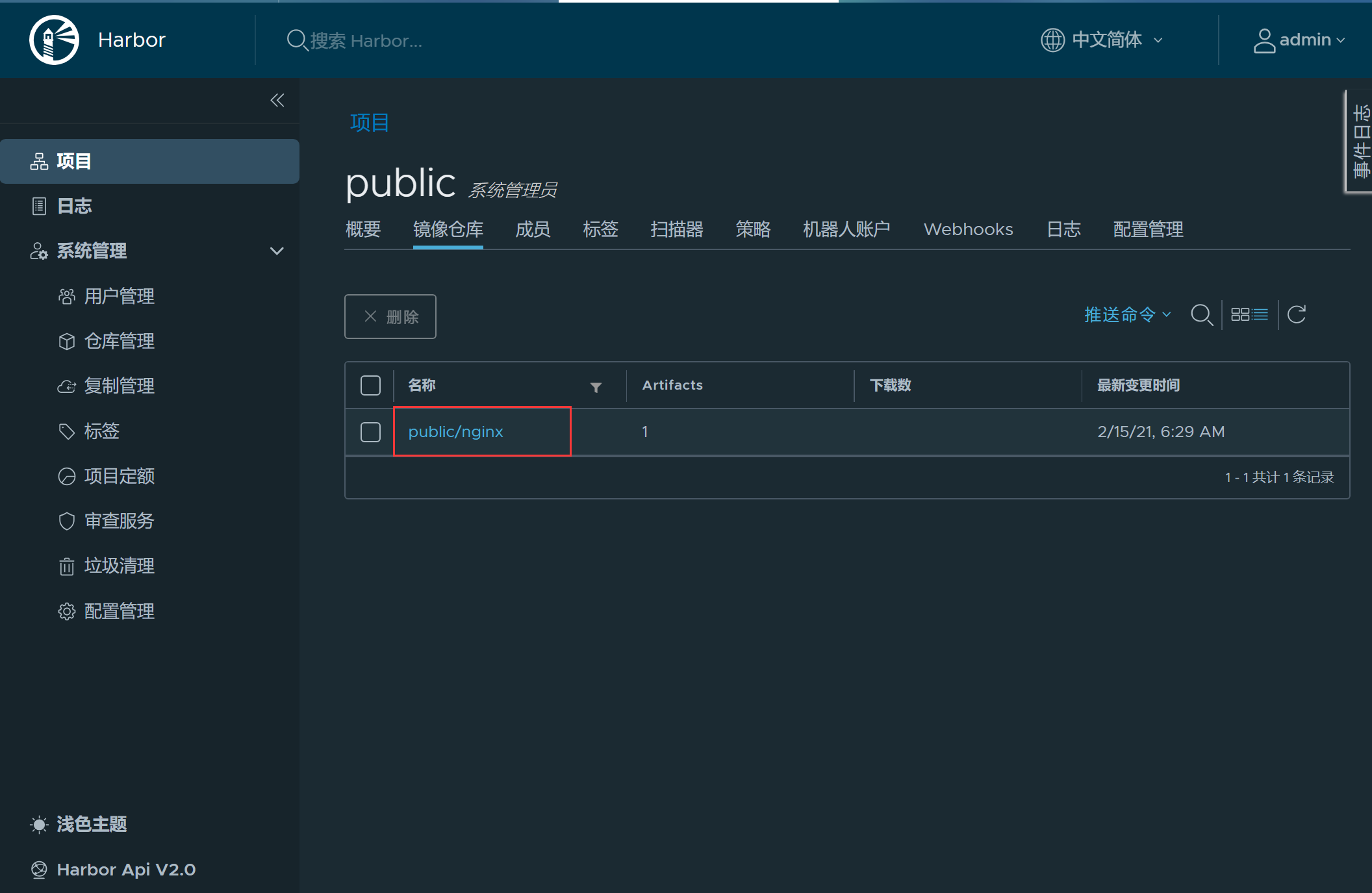

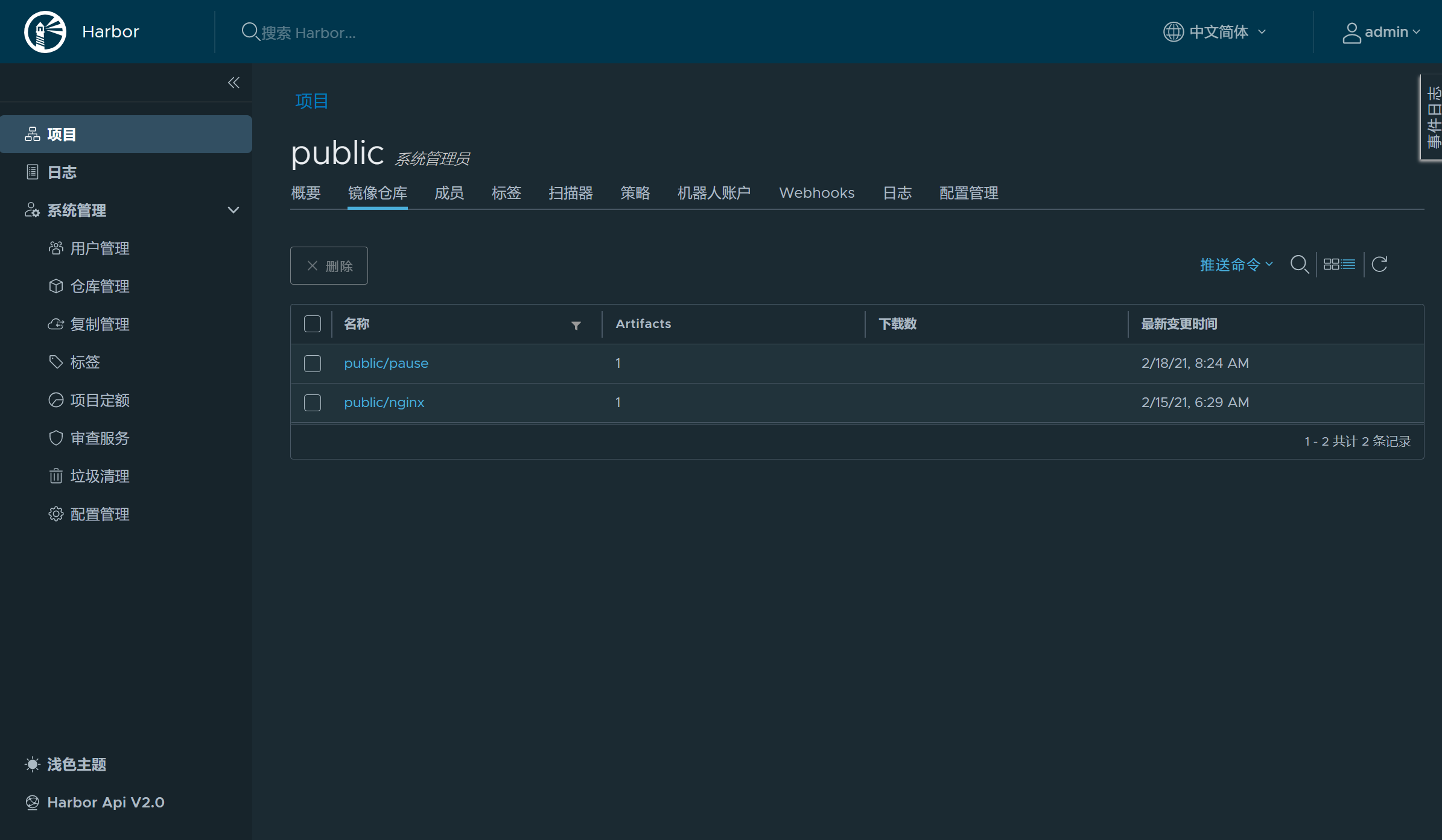

17. Create a project named public

18. Pull an nginx image

docker pull nginx

19. Tag the image

docker tag f6d0b4767a6c harbor.example.com/public/nginx:latest

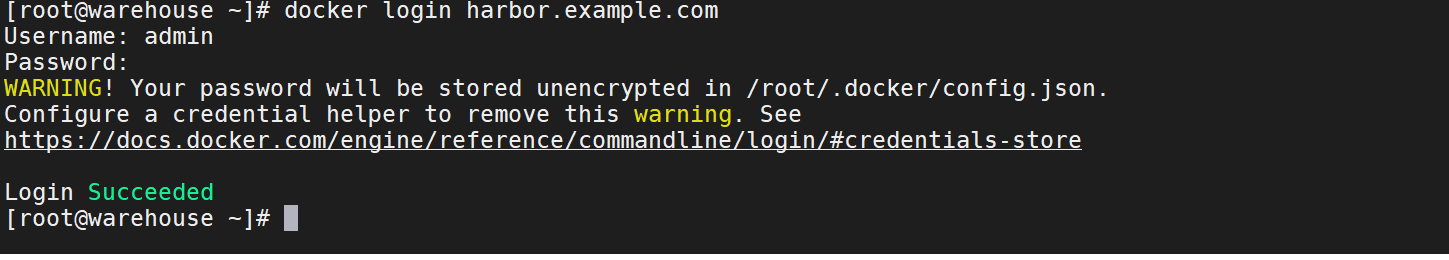

20. Log in to the private Harbor registry with docker login

docker login harbor.example.com

21. Push the tagged image to the private registry

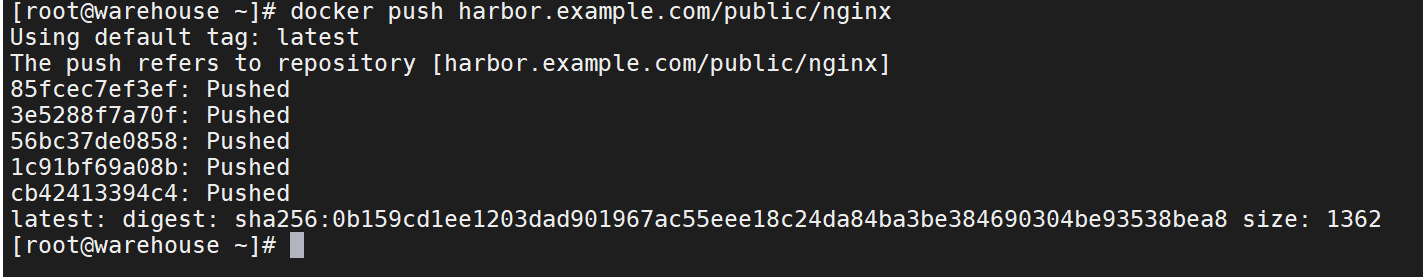

docker push harbor.example.com/public/nginx

22. Confirm in the web UI that the image was uploaded

VI. Building the K8S cluster

Installing etcd

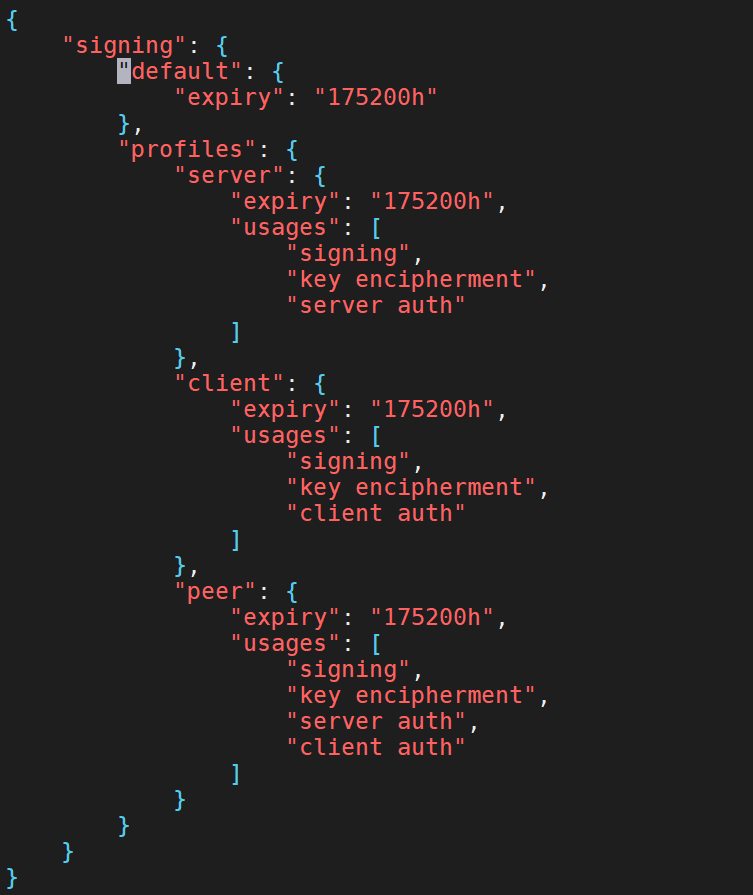

1. On the CFSSL host, create the signing configuration file ca-config.json in /opt/certs

vim /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

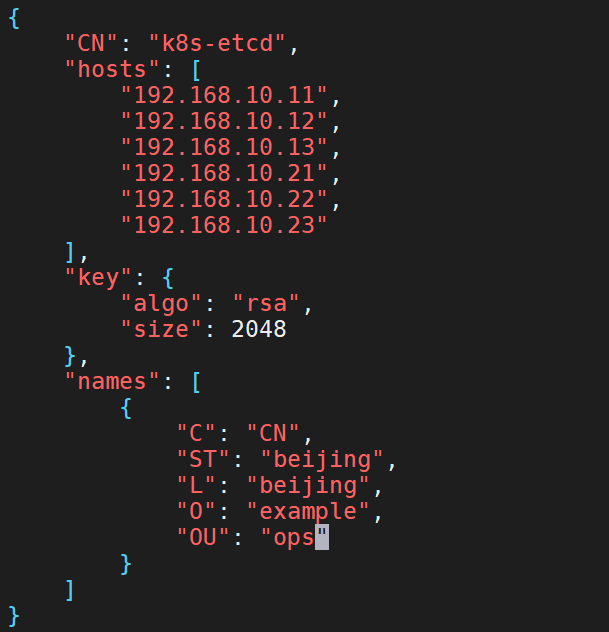

2. Create the etcd certificate signing request file etcd-peer-csr.json in /opt/certs

vim /opt/certs/etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"192.168.10.11",

"192.168.10.12",

"192.168.10.13",

"192.168.10.21",

"192.168.10.22",

"192.168.10.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

]

}

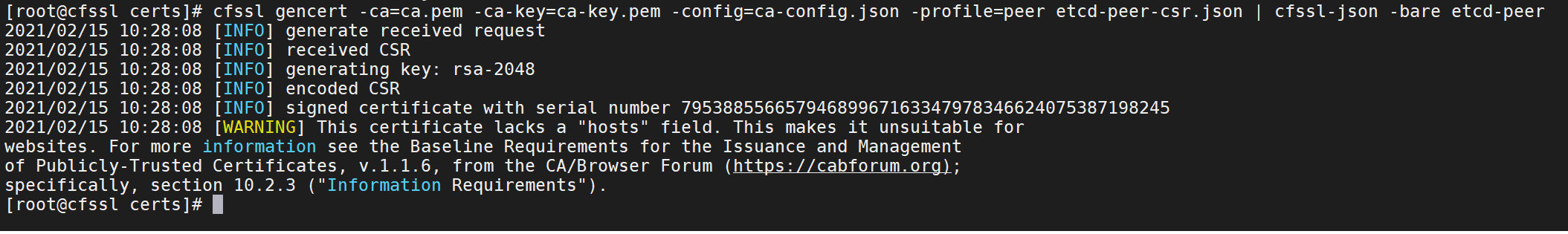

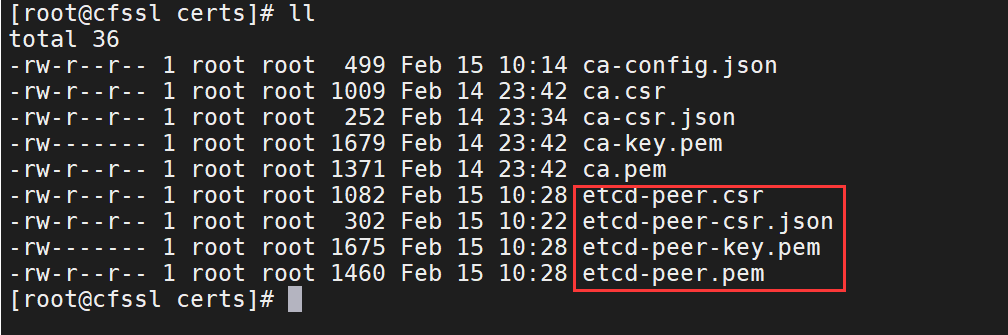

3. Issue the etcd certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

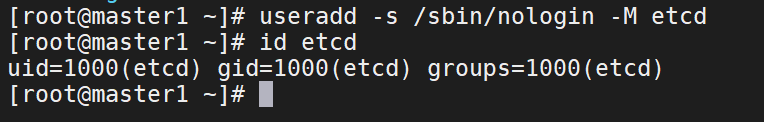

4. Create the etcd user on every host that runs etcd (192.168.10.21 to 192.168.10.23 in this layout)

useradd -s /sbin/nologin -M etcd

5. Download etcd

GitHub repository: https://github.com/etcd-io/etcd

I downloaded: etcd-v3.4.14-linux-amd64.tar.gz

6. Extract, rename, and symlink

tar vxf etcd-v3.4.14-linux-amd64.tar.gz -C /opt

mv /opt/etcd-v3.4.14-linux-amd64 /opt/etcd-v3.4.14

ln -s /opt/etcd-v3.4.14 /opt/etcd

7. Create the etcd data, log, and certificate directories

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

chown -R etcd.etcd /data/logs/etcd-server/

8. Copy the certificates and private key from the CFSSL host into /opt/etcd/certs

ca.pem, etcd-peer-key.pem, etcd-peer.pem

9. Create the etcd startup script /opt/etcd/etcd-server-startup.sh

Startup script for host 10-21

#!/bin/sh

./etcd --name etcd-server-10-21 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.10.21:2380 \

--listen-client-urls https://192.168.10.21:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.10.21:2380 \

--advertise-client-urls https://192.168.10.21:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-10-21=https://192.168.10.21:2380,etcd-server-10-22=https://192.168.10.22:2380,etcd-server-10-23=https://192.168.10.23:2380 \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

Startup script for host 10-22

#!/bin/sh

./etcd --name etcd-server-10-22 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.10.22:2380 \

--listen-client-urls https://192.168.10.22:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.10.22:2380 \

--advertise-client-urls https://192.168.10.22:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-10-21=https://192.168.10.21:2380,etcd-server-10-22=https://192.168.10.22:2380,etcd-server-10-23=https://192.168.10.23:2380 \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

Startup script for host 10-23

#!/bin/sh

./etcd --name etcd-server-10-23 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.10.23:2380 \

--listen-client-urls https://192.168.10.23:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.10.23:2380 \

--advertise-client-urls https://192.168.10.23:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-10-21=https://192.168.10.21:2380,etcd-server-10-22=https://192.168.10.22:2380,etcd-server-10-23=https://192.168.10.23:2380 \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

The three scripts differ only in these flags:

--name

--listen-peer-urls

--listen-client-urls

--initial-advertise-peer-urls

--advertise-client-urls
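Since only these five flags change between hosts, they can be derived from the host IP instead of being edited by hand. A minimal sketch, assuming the naming pattern `etcd-server-<3rd octet>-<4th octet>` seen in the scripts above and a hypothetical helper `etcd_node_flags`:

```shell
#!/bin/sh
# etcd_node_flags is a hypothetical helper that prints the per-host
# etcd flags for a given IP address.
etcd_node_flags() {
    ip="$1"
    fourth="${ip##*.}"     # last octet, e.g. 21
    rest="${ip%.*}"        # first three octets, e.g. 192.168.10
    third="${rest##*.}"    # third octet, e.g. 10
    cat <<EOF
--name etcd-server-${third}-${fourth}
--listen-peer-urls https://${ip}:2380
--listen-client-urls https://${ip}:2379,http://127.0.0.1:2379
--initial-advertise-peer-urls https://${ip}:2380
--advertise-client-urls https://${ip}:2379,http://127.0.0.1:2379
EOF
}

etcd_node_flags 192.168.10.22
```

The remaining flags (data directory, cluster member list, certificate paths) are identical on all three hosts and can stay in a shared template.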

10. Set ownership and make the startup script executable

chown -R etcd.etcd /opt/etcd-v3.4.14/

chown -R etcd.etcd /data/etcd

chmod +x /opt/etcd/etcd-server-startup.sh

11. Install supervisor to manage the startup script

yum install supervisor -y

systemctl start supervisord

systemctl enable supervisord

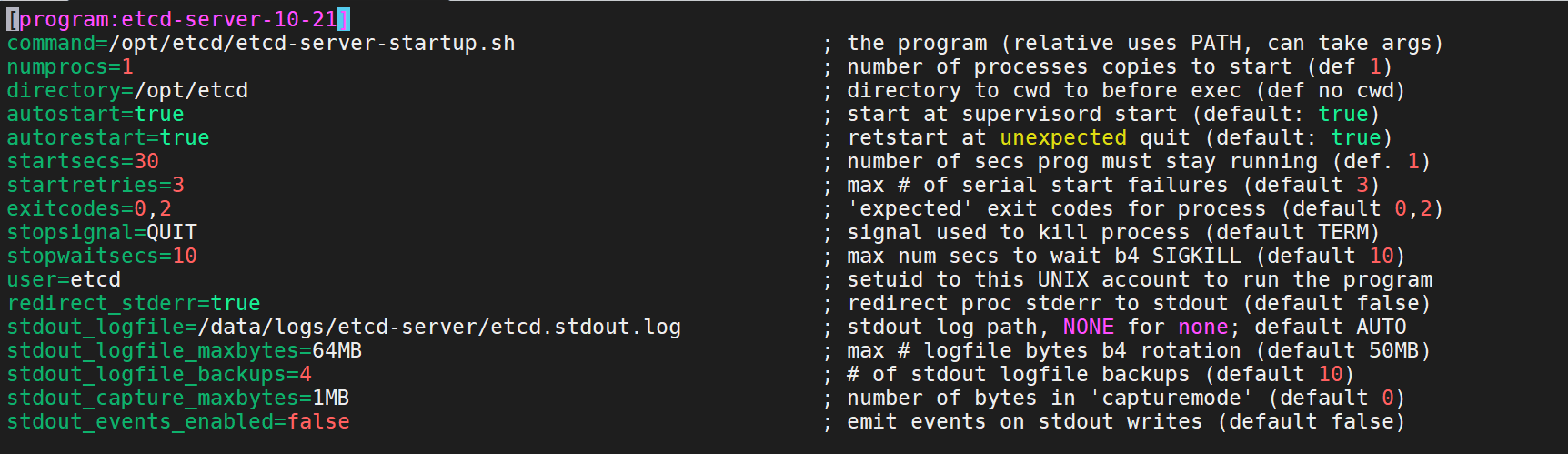

12. Create the supervisor program file for etcd, /etc/supervisord.d/etcd-server.ini

[program:etcd-server-10-21]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

13. Start the etcd service and check it

supervisorctl update

supervisorctl status

14. Start or stop the etcd service

supervisorctl start etcd-server-10-21

supervisorctl stop etcd-server-10-21

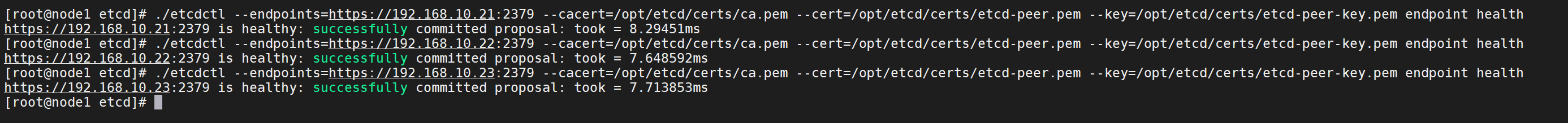

15. Check etcd health

./etcdctl --endpoints=https://192.168.10.21:2379 --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem endpoint health

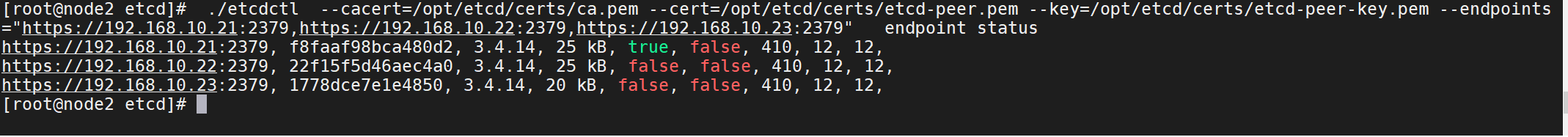

16. Show the current leader

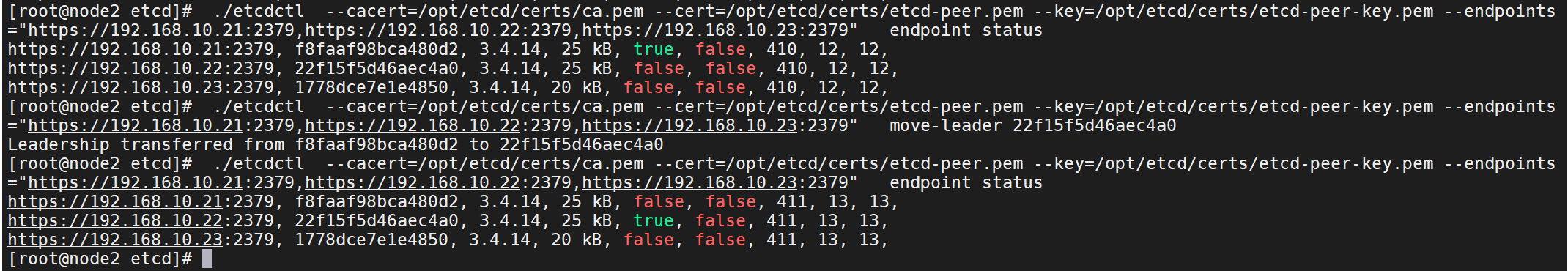

./etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.22:2379,https://192.168.10.23:2379" endpoint status
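The long `--endpoints` value is the same list of client URLs each time. A small sketch, using a hypothetical helper `etcd_endpoints`, that builds the comma-separated list from the member IPs so it can be kept in a variable:

```shell
#!/bin/sh
# etcd_endpoints is a hypothetical helper: it joins the given IPs into
# a comma-separated list of https client URLs on port 2379.
etcd_endpoints() {
    out=""
    for ip in "$@"; do
        out="${out:+$out,}https://${ip}:2379"
    done
    echo "$out"
}

ENDPOINTS="$(etcd_endpoints 192.168.10.21 192.168.10.22 192.168.10.23)"
echo "$ENDPOINTS"
```

The variable can then be passed as `--endpoints="$ENDPOINTS"` to the etcdctl commands shown in this section.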

17. To move leadership to another member

./etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.22:2379,https://192.168.10.23:2379" move-leader 22f15f5d46aec4a0

./etcdctl --cacert=/opt/etcd/certs/ca.pem --cert=/opt/etcd/certs/etcd-peer.pem --key=/opt/etcd/certs/etcd-peer-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.22:2379,https://192.168.10.23:2379" endpoint status

Installing the apiserver

Official GitHub repository: https://github.com/kubernetes/kubernetes

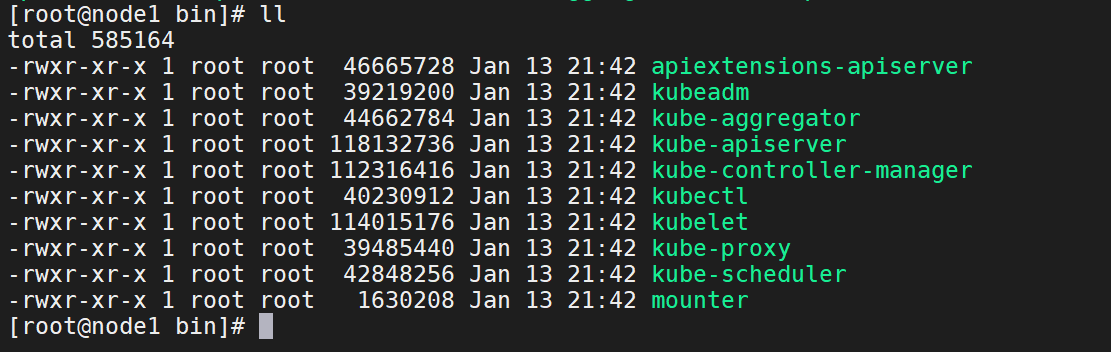

I downloaded kubernetes-server-linux-amd64.tar.gz, version 1.15.12.

1. Extract kubernetes

tar -vxf kubernetes-server-linux-amd64.tar.gz -C /opt

2. List the files under the extracted server directory

3. Create the certs and conf directories

mkdir /opt/kubernetes/server/bin/certs /opt/kubernetes/server/bin/conf

4. Create the JSON CSR file for the client certificate

vim /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

]

}

5. Generate the client certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

6. Create the JSON CSR file for the kube-apiserver certificate

vi /opt/certs/apiserver-csr.json

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"10.10.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"sevattal-11.host.com",

"sevattal-12.host.com",

"sevattal-13.host.com",

"sevattal-21.host.com",

"sevattal-22.host.com",

"sevattal-23.host.com",

"192.168.10.10",

"192.168.10.11",

"192.168.10.12",

"192.168.10.13",

"192.168.10.21",

"192.168.10.22",

"192.168.10.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

]

}

7. Generate the kube-apiserver certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver

8. Copy the certificate files to /opt/kubernetes/server/bin/certs

apiserver.pem apiserver-key.pem client.pem client-key.pem ca.pem ca-key.pem

9. Create the apiserver audit policy file /opt/kubernetes/server/bin/conf/audit.yaml

apiVersion: audit.k8s.io/v1beta1

kind: Policy

omitStages:

- "RequestReceived"

rules:

- level: RequestResponse

resources:

- group: ""

resources: ["pods"]

- level: Metadata

resources:

- group: ""

resources: ["pods/log", "pods/status"]

- level: None

resources:

- group: ""

resources: ["configmaps"]

resourceNames: ["controller-leader"]

- level: None

users: ["system:kube-proxy"]

verbs: ["watch"]

resources:

- group: ""

resources: ["endpoints", "services"]

- level: None

userGroups: ["system:authenticated"]

nonResourceURLs:

- "/api*"

- "/version"

- level: Request

resources:

- group: ""

resources: ["configmaps"]

namespaces: ["kube-system"]

- level: Metadata

resources:

- group: ""

resources: ["secrets", "configmaps"]

- level: Request

resources:

- group: ""

- group: "extensions"

- level: Metadata

omitStages:

- "RequestReceived"

10. Create the apiserver startup script kube-apiserver.sh

#!/bin/bash

./kube-apiserver \

--apiserver-count 3 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./certs/ca.pem \

--requestheader-client-ca-file ./certs/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./certs/ca.pem \

--etcd-certfile ./certs/client.pem \

--etcd-keyfile ./certs/client-key.pem \

--etcd-servers https://192.168.10.21:2379,https://192.168.10.22:2379,https://192.168.10.23:2379 \

--service-account-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.10.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./certs/client.pem \

--kubelet-client-key ./certs/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./certs/apiserver.pem \

--tls-private-key-file ./certs/apiserver-key.pem \

--v 2

11. Make kube-apiserver.sh executable and create the log directory

chmod +x kube-apiserver.sh

mkdir -p /data/logs/kubernetes/kube-apiserver

12. Create the supervisord program file /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-10-21]

command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

13. Start the apiserver service

supervisorctl update

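Note:

If startup fails with the error below, check whether the NIC has a default gateway configured. If it does not, configure one and restart the network service.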

Error: Unable to find suitable network address.error='no default routes found in "/proc/net/route" or "/proc/net/ipv6_route"'. Try to set the AdvertiseAddress directly or provide a valid BindAddress to fix this.

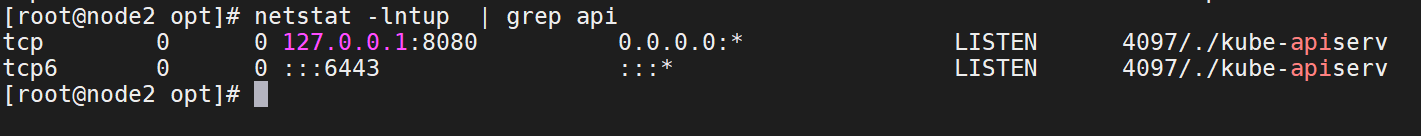

14. Check the listening ports

netstat -lntup | grep api

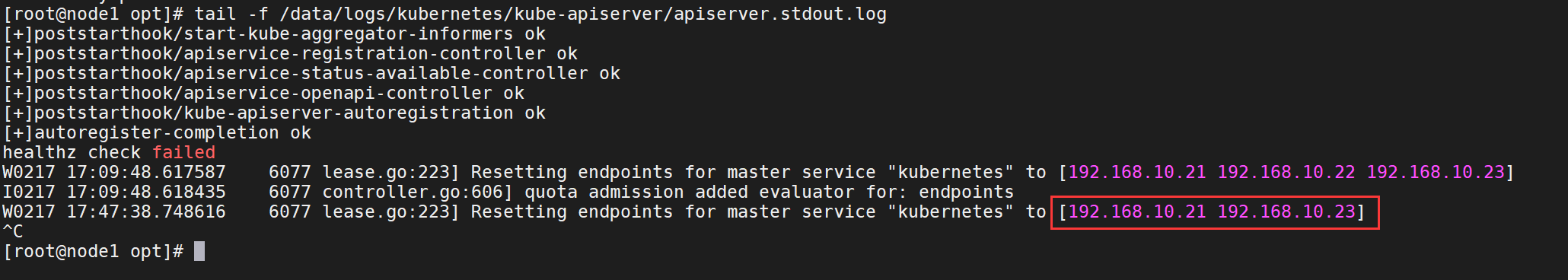

15. Tail the log to see the current master

tail -f /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

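Deploying the L4 reverse proxy on the masters

Cluster layout

| Hostname | Role | IP address | VIP address |
|---|---|---|---|
| master1.example.com | L4 | 192.168.10.11 | 192.168.10.10 |
| master2.example.com | L4 | 192.168.10.12 | 192.168.10.10 |
| master3.example.com | L4 | 192.168.10.13 | 192.168.10.10 |

nginx acts as the L4 reverse proxy and keepalived provides high availability; port 6443 of the backend apiservers is exposed as port 7443 on the proxy.

1. Install nginx and keepalived

yum install nginx keepalived -y

2. Edit the nginx configuration file /etc/nginx/nginx.conf and add the stream block at the top level, outside the http block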

stream {

upstream kube-apiserver {

server 192.168.10.21:6443 max_fails=3 fail_timeout=30s;

server 192.168.10.22:6443 max_fails=3 fail_timeout=30s;

server 192.168.10.23:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

3. Start nginx

nginx -t

systemctl start nginx

systemctl enable nginx

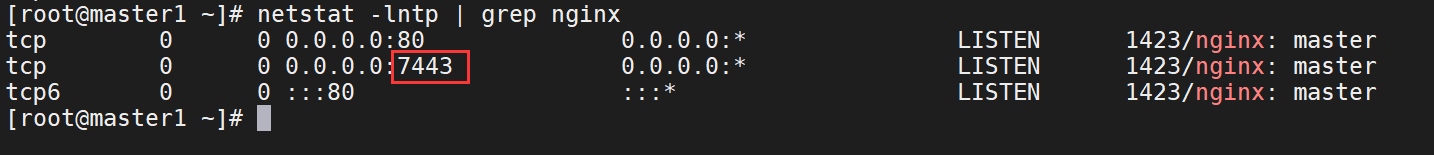

4. Check that nginx is listening on port 7443

netstat -lntp | grep nginx

5. Create the port-monitoring script /etc/keepalived/check_port.sh

#!/bin/bash

# keepalived port-monitoring script

# Usage:

# reference it from a vrrp_script block in the keepalived configuration file

# vrrp_script check_port

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`

if [ $PORT_PROCESS -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Cant Be Empty!"

exit 1

fi
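One caveat with the check above: `grep $CHK_PORT` matches any line containing the digits, so a listener on 17443 would also satisfy a check for 7443. A stricter variant is sketched below. It assumes the usual `ss -lnt` column layout (local address and port in the fourth field), and the function name `port_listening` is made up for illustration.

```shell
#!/bin/sh
# port_listening is a hypothetical helper. It reads `ss -lnt`-style
# output on stdin and succeeds only if some local address in field 4
# ends in ":<port>" exactly.
port_listening() {
    awk -v port="$1" '$4 ~ (":" port "$") { found = 1 } END { exit (found ? 0 : 1) }'
}

# Sample input standing in for real `ss -lnt` output.
sample='State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      128    *:17443            *:*
LISTEN 0      128    *:22               *:*'

if printf '%s\n' "$sample" | port_listening 7443; then
    echo "7443 is listening"
else
    echo "7443 is not listening"
fi
```

On a real host the call would be `ss -lnt | port_listening 7443`, and the exit status can be used directly as the result of the keepalived check script.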

6. Make the port-monitoring script executable

chmod +x /etc/keepalived/check_port.sh

7. Create the keepalived configuration file /etc/keepalived/keepalived.conf

Node 10-11 (primary)

! Configuration File for keepalived

global_defs {

router_id 192.168.10.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 251

mcast_src_ip 192.168.10.11

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.10.10

}

}

Node 10-12

! Configuration File for keepalived

global_defs {

router_id 192.168.10.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 251

mcast_src_ip 192.168.10.12

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.10.10

}

}

Node 10-13

! Configuration File for keepalived

global_defs {

router_id 192.168.10.13

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 251

mcast_src_ip 192.168.10.13

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.10.10

}

}

8. Start keepalived

systemctl start keepalived

systemctl enable keepalived

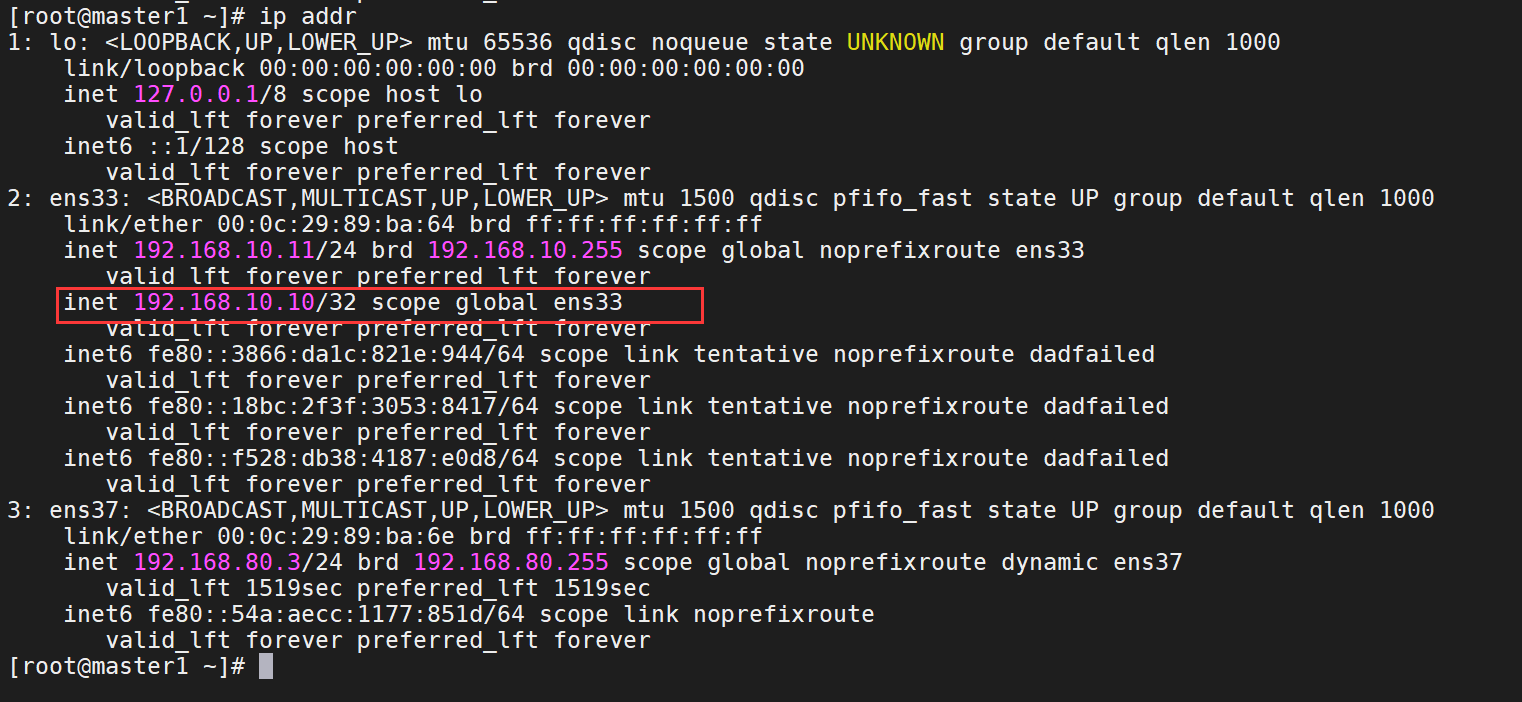

9. Check that the virtual IP is up

ip addr

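Notes:

The interface named in the keepalived configuration must match the actual NIC name on each host: interface ens33

To troubleshoot, check the log: tail -fn 200 /var/log/messages

In production the VIP should not be allowed to float back and forth casually. To test failover: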

nginx -s stop

netstat -nltp| grep 7443

ip addr

Deploying kube-controller-manager on the nodes

Cluster layout

| Hostname | Role | IP address |
|---|---|---|
| node1.example.com | controller-manager | 192.168.10.21 |
| node2.example.com | controller-manager | 192.168.10.22 |
| node3.example.com | controller-manager | 192.168.10.23 |

1. Create the startup script /opt/kubernetes/server/bin/kube-controller-manager.sh

vim /opt/kubernetes/server/bin/kube-controller-manager.sh

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.10.0.0/16 \

--root-ca-file ./certs/ca.pem \

--v 2

2. Make the script executable and create the log directory

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh

mkdir -p /data/logs/kubernetes/kube-controller-manager

3. Create the supervisor program file /etc/supervisord.d/kube-controller-manager.ini

vim /etc/supervisord.d/kube-controller-manager.ini

[program:kube-controller-manager-10-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

4. Start the service and check it

supervisorctl update

supervisorctl status

Deploying kube-scheduler

Cluster layout

| Hostname | Role | IP address |
|---|---|---|
| node1.example.com | kube-scheduler | 192.168.10.21 |
| node2.example.com | kube-scheduler | 192.168.10.22 |
| node3.example.com | kube-scheduler | 192.168.10.23 |

1. Create the kube-scheduler startup script

vi /opt/kubernetes/server/bin/kube-scheduler.sh

#!/bin/sh

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

2. Make the script executable and create the log directory

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

mkdir -p /data/logs/kubernetes/kube-scheduler

3. Create the supervisor program file /etc/supervisord.d/kube-scheduler.ini

vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-10-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

4. Start the service and check it

supervisorctl update

supervisorctl status

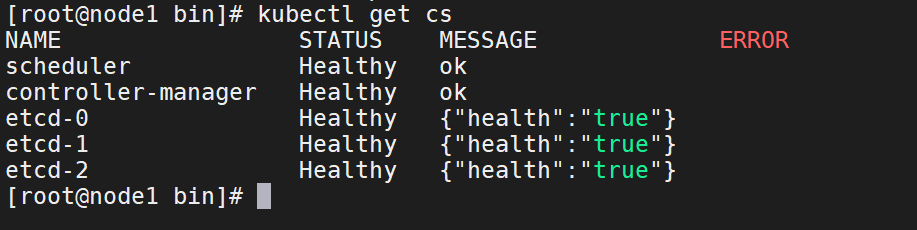

Checking cluster component status

1. Symlink kubectl into /usr/bin

ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

2. Check component status

kubectl get cs

Deploying kubelet

Cluster layout

| Hostname | Role | IP address |
|---|---|---|
| node1.example.com | kubelet | 192.168.10.21 |
| node2.example.com | kubelet | 192.168.10.22 |
| node3.example.com | kubelet | 192.168.10.23 |

1. Create the JSON CSR file kubelet-csr.json for the kubelet certificate

vi kubelet-csr.json

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"192.168.10.10",

"192.168.10.11",

"192.168.10.12",

"192.168.10.13",

"192.168.10.21",

"192.168.10.22",

"192.168.10.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

]

}

2. Generate the kubelet certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

3. Copy the certificates to /opt/kubernetes/server/bin/certs on every node

kubelet-key.pem kubelet.pem

4. Create the kubeconfig

set-cluster

kubectl config set-cluster myk8s --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem --embed-certs=true --server=https://192.168.10.10:7443 --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

set-credentials

kubectl config set-credentials k8s-node --client-certificate=/opt/kubernetes/server/bin/certs/client.pem --client-key=/opt/kubernetes/server/bin/certs/client-key.pem --embed-certs=true --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

set-context

kubectl config set-context myk8s-context --cluster=myk8s --user=k8s-node --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

use-context

kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

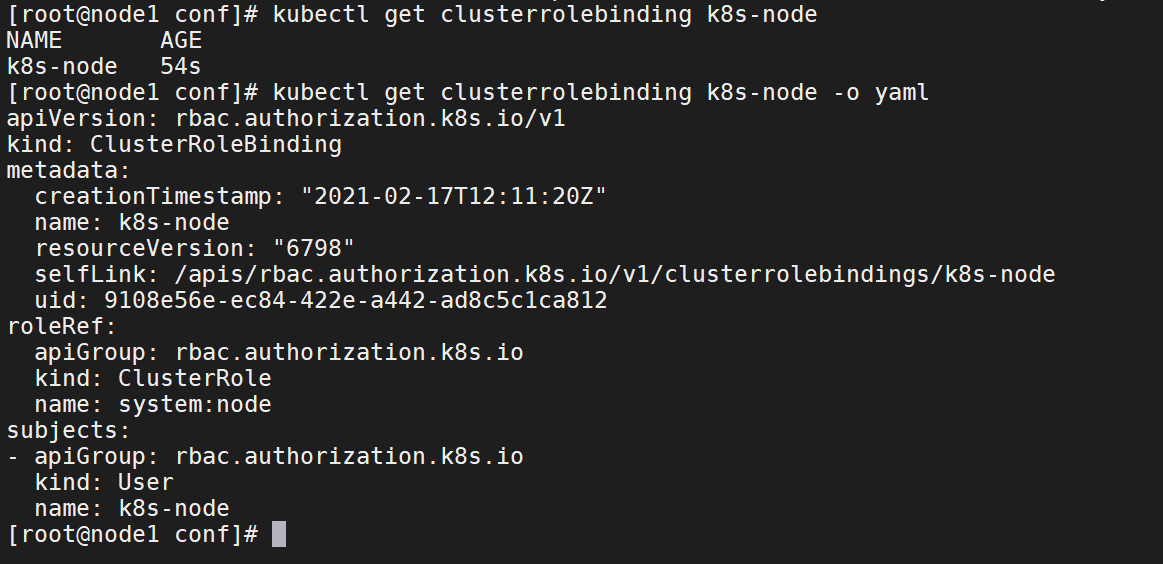
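The four kubectl config steps differ only in the user name, the client certificate pair, and the output file, so they can be wrapped in one function. The sketch below is one possible way to do that, not the author's method; `run`, `make_kubeconfig`, and the `DRY_RUN` switch are names invented here. By default it only prints the commands:

```shell
#!/bin/bash
# Build a kubeconfig in four steps. With DRY_RUN=1 (the default here) the
# kubectl commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Arguments: <user name> <client cert file> <client key file> <output kubeconfig>
make_kubeconfig() {
    local user="$1" cert="$2" key="$3" out="$4"
    local certs=/opt/kubernetes/server/bin/certs
    run kubectl config set-cluster myk8s \
        --certificate-authority="$certs/ca.pem" --embed-certs=true \
        --server=https://192.168.10.10:7443 --kubeconfig="$out"
    run kubectl config set-credentials "$user" \
        --client-certificate="$certs/$cert" --client-key="$certs/$key" \
        --embed-certs=true --kubeconfig="$out"
    run kubectl config set-context myk8s-context \
        --cluster=myk8s --user="$user" --kubeconfig="$out"
    run kubectl config use-context myk8s-context --kubeconfig="$out"
}

make_kubeconfig k8s-node client.pem client-key.pem \
    /opt/kubernetes/server/bin/conf/kubelet.kubeconfig
```

Setting DRY_RUN=0 would execute the commands for real. The same function could be called with kube-proxy, kube-proxy-client.pem, and kube-proxy-client-key.pem when building kube-proxy.kubeconfig later in this guide.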

k8s-node.yaml (/opt/kubernetes/server/bin/conf/k8s-node.yaml)

vi /opt/kubernetes/server/bin/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

Apply the resource manifest

kubectl create -f /opt/kubernetes/server/bin/conf/k8s-node.yaml

View the cluster role binding and its details

kubectl get clusterrolebinding k8s-node

kubectl get clusterrolebinding k8s-node -o yaml

5. Copy kubelet.kubeconfig to /opt/kubernetes/server/bin/conf/ on the other nodes

kubelet.kubeconfig

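6. Fetch the pause base image on the private registry host

Log in to the private registry

docker login harbor.example.com

Pull the pause image

docker pull kubernetes/pause

List the pause image

docker images | grep pause

Tag the pause image (f9d5de079539 is the image ID shown by the previous command)

docker tag f9d5de079539 harbor.example.com/public/pause:latest

Push the pause image to the private Harbor registry

docker push harbor.example.com/public/pause:latest

Check the result in the Harbor web UI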
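Tagging by image ID works, but the ID differs whenever the upstream image changes. As a rough alternative, the target name can be computed from the upstream reference and the image tagged by name. `private_ref` below is a hypothetical helper written for this example:

```shell
#!/bin/bash
# Map an upstream image reference to its name in a private registry project.
# Example: kubernetes/pause:latest -> harbor.example.com/public/pause:latest
private_ref() {
    local registry="$1" project="$2" image="$3"
    # ${image##*/} strips everything up to the last "/", which drops the
    # upstream namespace and keeps "name:tag".
    echo "${registry}/${project}/${image##*/}"
}

# Tagging and pushing by name instead of by image ID (not executed here):
#   target="$(private_ref harbor.example.com public kubernetes/pause:latest)"
#   docker tag kubernetes/pause:latest "$target"
#   docker push "$target"
```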

7. Create the kubelet startup script

vi /opt/kubernetes/server/bin/kubelet.sh

#!/bin/sh
# The paths below are relative, so this must run from /opt/kubernetes/server/bin
# (supervisor does this through its directory= setting).
# Set --hostname-override to the hostname of the node this script runs on.
./kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 10.10.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file ./certs/ca.pem \
  --tls-cert-file ./certs/kubelet.pem \
  --tls-private-key-file ./certs/kubelet-key.pem \
  --hostname-override sevattal-21.host.com \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image harbor.example.com/public/pause:latest \
  --root-dir /data/kubelet

8. Make the script executable and create the directories

chmod +x /opt/kubernetes/server/bin/kubelet.sh

mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

9. Create the supervisor config file

vi /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-10-21]

command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)
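Both the --hostname-override value in kubelet.sh and the [program:...] name in the supervisor config embed the node's address, so they have to be adjusted on each node. The sketch below derives both from a node IP. It assumes the naming pattern seen in this guide (sevattal-<last octet>.host.com and kube-kubelet-<third octet>-<last octet>); the function names are invented for illustration:

```shell
#!/bin/bash
# Derive per-node values from a node IP, following the naming pattern
# assumed from this guide.
node_hostname() {
    local ip="$1"
    # ${ip##*.} keeps only the last octet.
    echo "sevattal-${ip##*.}.host.com"
}

program_name() {
    local ip="$1" rest third
    rest="${ip%.*}"        # e.g. 192.168.10
    third="${rest##*.}"    # e.g. 10
    echo "kube-kubelet-${third}-${ip##*.}"
}

# Print IP, hostname, and supervisor program name for each node.
for ip in 192.168.10.21 192.168.10.22 192.168.10.23; do
    echo "$ip $(node_hostname "$ip") $(program_name "$ip")"
done
```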

10. Start the service and check it

supervisorctl update

supervisorctl status

Note:

failed to run Kubelet: failed to create kubelet: failed to get docker version: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

If kubelet reports the error above at startup, start Docker first.

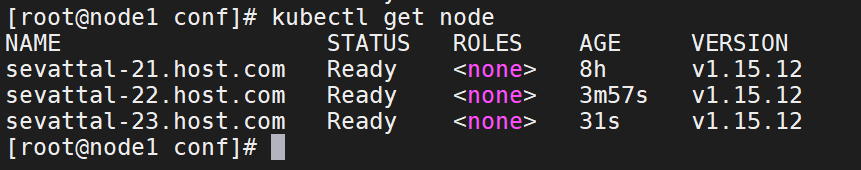

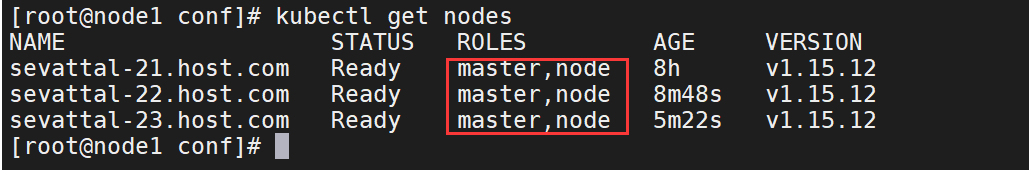

11. Once all nodes are up, use kubectl get node to list them

kubectl get node
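A kubelet can take a little while to register after supervisor starts it, so the first kubectl get node may not list every node yet. One way to poll is a small retry helper. This is a generic sketch; `retry` is not a kubectl feature:

```shell
#!/bin/bash
# Run a command until it succeeds, at most <attempts> times, sleeping
# <delay> seconds between attempts. Returns 0 on success, 1 otherwise.
retry() {
    local attempts="$1" delay="$2"
    shift 2
    local i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        i=$((i + 1))
        # Only sleep if another attempt will follow.
        if [ "$i" -le "$attempts" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Wait up to about a minute for one node to register (not executed here):
#   retry 12 5 kubectl get node sevattal-21.host.com
```

kubectl get node with a node name exits non-zero while that node object does not exist, which is what makes it usable as the polled command.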

12. Label all nodes

kubectl label node sevattal-21.host.com node-role.kubernetes.io/master=sevattal-21.host.com

kubectl label node sevattal-21.host.com node-role.kubernetes.io/node=sevattal-21.host.com

kubectl label node sevattal-22.host.com node-role.kubernetes.io/master=sevattal-22.host.com

kubectl label node sevattal-22.host.com node-role.kubernetes.io/node=sevattal-22.host.com

kubectl label node sevattal-23.host.com node-role.kubernetes.io/master=sevattal-23.host.com

kubectl label node sevattal-23.host.com node-role.kubernetes.io/node=sevattal-23.host.com
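The ROLES column of kubectl get node is built from the label keys under node-role.kubernetes.io/, so the label value itself is free-form. A compact sketch that prints the same six commands with an empty value; the variable and function names are chosen here for illustration:

```shell
#!/bin/bash
# Print the label commands for every node. Only the label key determines
# what appears in the ROLES column, so the value is left empty here.
NODE_NAMES="sevattal-21.host.com sevattal-22.host.com sevattal-23.host.com"

print_label_cmds() {
    local node role
    for node in $NODE_NAMES; do
        for role in master node; do
            echo "kubectl label node ${node} node-role.kubernetes.io/${role}="
        done
    done
}

print_label_cmds
```

Piping the output to sh would run the commands; printing them first makes it easy to review what will be applied.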

Deploy kube-proxy

Cluster plan

| Hostname | Role | IP address |

|---|---|---|

| node1.example.com | kube-proxy | 192.168.10.21 |

| node2.example.com | kube-proxy | 192.168.10.22 |

| node3.example.com | kube-proxy | 192.168.10.23 |

1. Create the CSR JSON config file used to generate the certificate

vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "example",

"OU": "ops"

}

]

}

2. Generate the kube-proxy certificate files

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

3. Send the certificate files to /opt/kubernetes/server/bin/certs on every node

kube-proxy-client.pem kube-proxy-client-key.pem

4. Create the kubeconfig

set-cluster

kubectl config set-cluster myk8s --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem --embed-certs=true --server=https://192.168.10.10:7443 --kubeconfig=/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig

set-credentials

kubectl config set-credentials kube-proxy --client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem --client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem --embed-certs=true --kubeconfig=/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig

set-context

kubectl config set-context myk8s-context --cluster=myk8s --user=kube-proxy --kubeconfig=/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig

use-context

kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig

5. Copy kube-proxy.kubeconfig to /opt/kubernetes/server/bin/conf/ on the other nodes

kube-proxy.kubeconfig

6. Create the ipvs script

vi /root/ipvs.sh

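#!/bin/bash
# Load every IPVS kernel module that ships with the running kernel.
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls "$ipvs_mods_dir" | grep -o "^[^.]*")
do
  # Only load modules that modinfo can resolve.
  /sbin/modinfo -F filename "$i" &>/dev/null
  if [ $? -eq 0 ]; then
    /sbin/modprobe "$i"
  fi
done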

7. Run the ipvs script

chmod +x /root/ipvs.sh

. /root/ipvs.sh

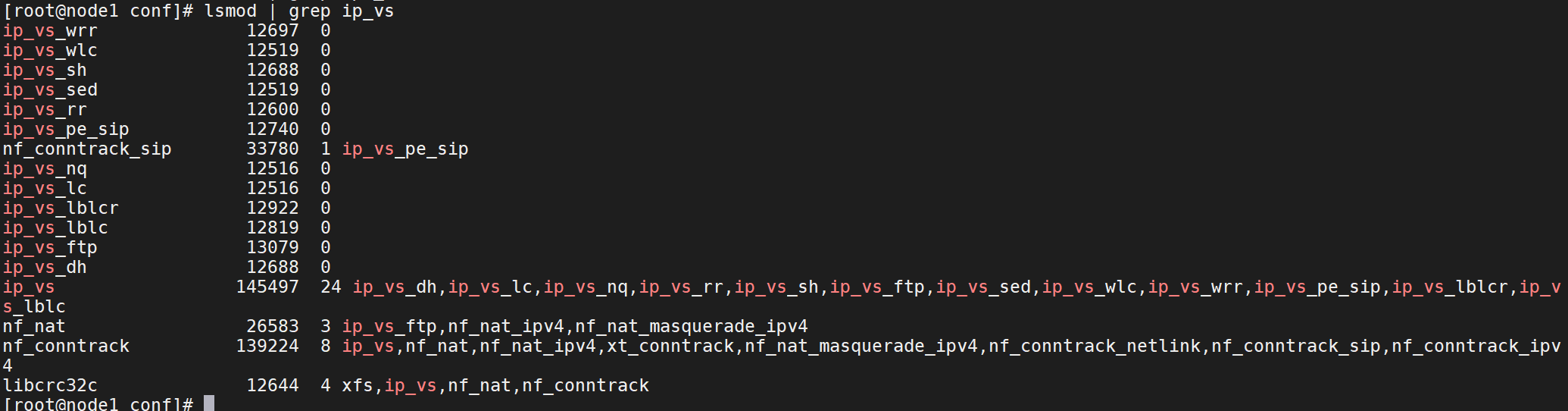

8. Check that the ipvs modules are loaded

lsmod | grep ip_vs
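Reading the lsmod output by eye is easy to get wrong when many modules are listed. A small sketch that reports which required modules are absent; `missing_modules` is a made-up helper, and the module list in the usage comment is an assumption (ip_vs_nq being the module behind the nq scheduler):

```shell
#!/bin/bash
# Print each required module that is absent from `lsmod` output.
# Reads lsmod output on stdin; module names are given as arguments.
missing_modules() {
    local loaded mod
    # The first column of lsmod is the module name; skip the header line.
    loaded="$(awk 'NR > 1 { print $1 }')"
    for mod in "$@"; do
        # -x requires the whole line to match, so ip_vs does not match ip_vs_rr.
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}

# Typical use on a node (not executed here):
#   lsmod | missing_modules ip_vs ip_vs_nq nf_conntrack
```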

IPVS load balancing in the kernel schedules at the granularity of connections. With non-persistent HTTP, fetching each object from the web server requires its own TCP connection, so different requests from the same user may be scheduled to different servers. This fine-grained scheduling helps, to some extent, avoid load imbalance between servers caused by bursts of traffic from a single user.

For connection scheduling in the kernel, IPVS implements the following ten algorithms:

Round-Robin Scheduling

Weighted Round-Robin Scheduling

Least-Connection Scheduling

Weighted Least-Connection Scheduling

Locality-Based Least Connections Scheduling

Locality-Based Least Connections with Replication Scheduling

Destination Hashing Scheduling

Source Hashing Scheduling

Shortest Expected Delay Scheduling

Never Queue Scheduling
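Each of these algorithms is selected by a short name, which is what kube-proxy's --ipvs-scheduler flag and ipvsadm's -s option expect. The lookup below is only an illustrative sketch of that mapping; `scheduler_name` is a made-up function:

```shell
#!/bin/bash
# Map an IPVS scheduler short name to the algorithm it selects.
# Returns 1 and prints a message on stderr for an unknown name.
scheduler_name() {
    case "$1" in
        rr)    echo "Round-Robin" ;;
        wrr)   echo "Weighted Round-Robin" ;;
        lc)    echo "Least-Connection" ;;
        wlc)   echo "Weighted Least-Connection" ;;
        lblc)  echo "Locality-Based Least Connections" ;;
        lblcr) echo "Locality-Based Least Connections with Replication" ;;
        dh)    echo "Destination Hashing" ;;
        sh)    echo "Source Hashing" ;;
        sed)   echo "Shortest Expected Delay" ;;
        nq)    echo "Never Queue" ;;
        *)     echo "unknown scheduler: $1" >&2; return 1 ;;
    esac
}

scheduler_name nq
```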

9. Create the kube-proxy startup script

vi /opt/kubernetes/server/bin/kube-proxy.sh

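#!/bin/sh
# The kubeconfig path is relative, so this must run from /opt/kubernetes/server/bin.
# Set --hostname-override to the hostname of the node this script runs on.
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override sevattal-21.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig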

10. Make the script executable and create the log directory

chmod +x /opt/kubernetes/server/bin/kube-proxy.sh

mkdir -p /data/logs/kubernetes/kube-proxy

11. Create the supervisor config

vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-10-21]

command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

12. Start the service and check it

supervisorctl update

supervisorctl status

13. Install ipvsadm

yum install ipvsadm -y

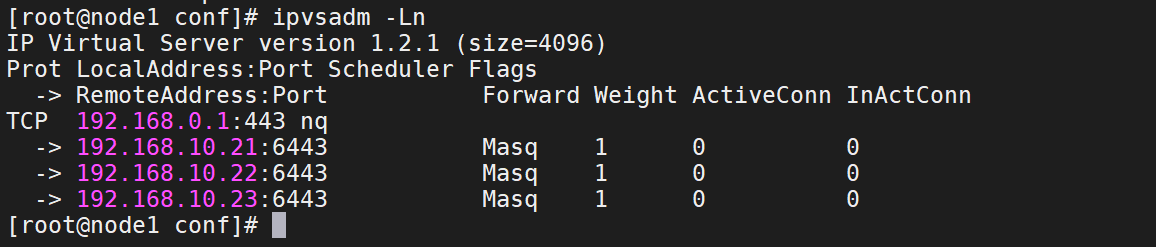

14. View the forwarding rules with ipvsadm

ipvsadm -Ln

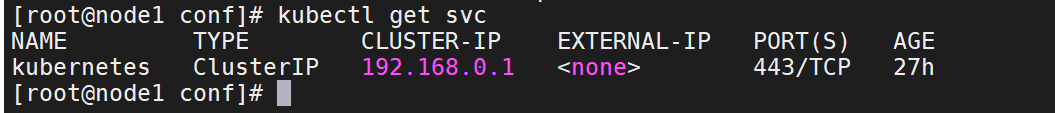

15. Check the services with kubectl

kubectl get svc

Verify the Kubernetes cluster

1. Create a resource manifest on node1

vi /root/nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.example.com/public/nginx:latest
        ports:
        - containerPort: 80

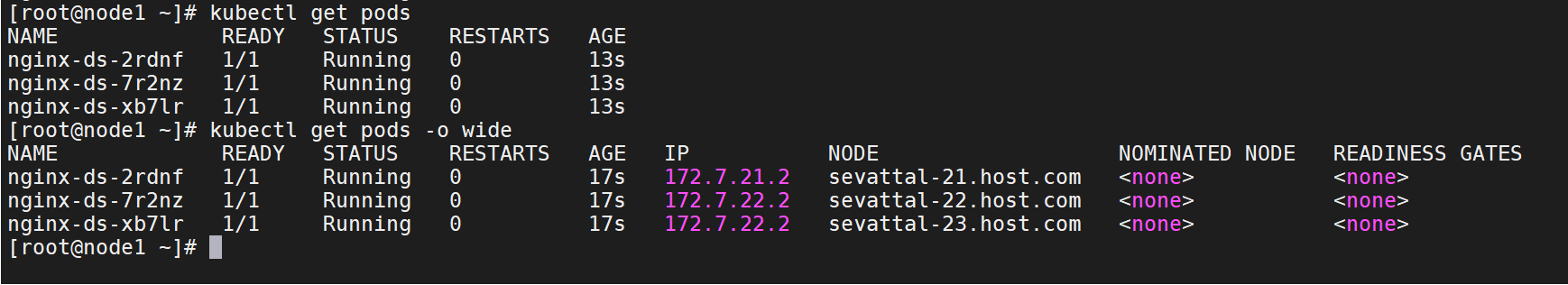
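The extensions/v1beta1 API for DaemonSet is no longer served from Kubernetes 1.16 onward. On such clusters an equivalent manifest under apps/v1, which additionally requires spec.selector, might look like the sketch below. The function name `write_nginx_ds` is invented here, and which variant applies depends on the cluster version actually deployed:

```shell
#!/bin/bash
# Write an apps/v1 variant of the nginx-ds manifest to the given path.
# apps/v1 requires spec.selector, and it must match the pod template labels.
write_nginx_ds() {
    cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  selector:
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.example.com/public/nginx:latest
        ports:
        - containerPort: 80
EOF
}

# Typical use on node1 (not executed here):
#   write_nginx_ds /root/nginx-ds.yaml && kubectl create -f /root/nginx-ds.yaml
```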

2. Apply the manifest and check

Apply the manifest on node1

kubectl create -f /root/nginx-ds.yaml

Check on node1, node2, and node3

kubectl get pods

kubectl get pods -o wide

What to start after each cluster restart

Check the configuration and start BIND 9

named-checkconf

systemctl start named

netstat -lntup|grep 53

Start nginx and bring up Harbor

/opt/harbor/install.sh

nginx -t

systemctl start nginx

systemctl enable nginx

Layer 4 reverse proxy

systemctl start nginx keepalived

systemctl enable nginx keepalived

netstat -lntup|grep nginx

ip addr